As we celebrate World Quality Week 2025 (November 10-14), I find myself reflecting on this year’s powerful theme: “Quality: think differently.” The Chartered Quality Institute’s call to challenge traditional approaches and embrace new ways of thinking resonates deeply with the work I’ve explored throughout the past year on my blog, investigationsquality.com. This theme isn’t just a catchy slogan—it’s an urgent imperative for pharmaceutical quality professionals navigating an increasingly complex regulatory landscape, rapid technological change, and evolving expectations for what quality systems should deliver.

The “think differently” mandate invites us to move beyond compliance theater toward quality systems that genuinely create value, build organizational resilience, and ultimately protect patients. As CQI articulates, this year’s campaign challenges us to reimagine quality not as a department or a checklist, but as a strategic mindset that shapes how we lead, build stakeholder trust, and drive organizational performance. Over the past twelve months, my writing has explored exactly this transformation—from principles-based compliance to falsifiable quality systems, from negative reasoning to causal understanding, and from reactive investigation to proactive risk management.

Let me share how the themes I’ve explored throughout 2024 and 2025 align with World Quality Week’s call to think differently about quality, drawing connections between regulatory realities, organizational challenges, and the future we’re building together.

The Regulatory Imperative: Evolving Expectations Demand New Thinking

Navigating the Evolving Landscape of Validation

My exploration of validation trends began in September 2024 with “Navigating the Evolving Landscape of Validation in Biotech,” where I analyzed the key findings of the 2024 State of Validation report. The data identified compliance burden as the top challenge, and 83% of organizations were either using or planning to adopt digital validation systems. Perhaps most tellingly, 61% of organizations reported an increased validation workload: a clear signal that business-as-usual approaches aren’t sustainable.

By June 2025, when I revisited this topic in “Navigating the Evolving Landscape of Validation in 2025,” the landscape had shifted dramatically. Audit readiness had overtaken compliance burden as the primary concern, marking what I called “a fundamental shift in how organizations prioritize regulatory preparedness.” This wasn’t just a statistical fluctuation; it reflected validation’s evolution from a tactical compliance activity into a cornerstone of enterprise quality.

The progression from 2024 to 2025 illustrates exactly what “thinking differently” means in practice. Organizations moved from scrambling to meet compliance requirements to building systems that maintain perpetual readiness. Digital validation adoption jumped: 58% of organizations now actually use these tools, and 93% either use them or plan to adopt them. More importantly, 63% of early adopters met or exceeded their ROI expectations, achieving 50% faster cycle times and fewer deviations.

This transformation demanded new mental models. As I wrote in the 2025 analysis, we need to shift from viewing validation as “a gate you pass through once” to “a state you maintain through ongoing verification.” This perfectly embodies the World Quality Week theme—moving from periodic compliance exercises to integrated systems where quality thinking drives strategy.

Computer System Assurance: Repackaging or Revolution?

One of my most provocative pieces from September 2025, “Computer System Assurance: The Emperor’s New Validation Approach,” challenged the pharmaceutical industry’s breathless embrace of CSA as revolutionary. My central argument: CSA largely repackages established GAMP principles that quality professionals have applied for over two decades, sold back to us as breakthrough innovation by consulting firms.

But here’s where “thinking differently” becomes crucial. The real revolution isn’t CSA versus CSV—it’s the shift from template-driven validation to genuinely risk-based approaches that GAMP has always advocated. Organizations with mature validation programs were already applying critical thinking, scaling validation activities appropriately, and leveraging supplier documentation effectively. They didn’t need CSA to tell them to think critically—they were already living risk-based validation principles.

The danger I identified is that CSA marketing exploits legitimate professional concerns, suggesting existing practices are inadequate when they remain perfectly sufficient. This creates what I call “compliance anxiety”—organizations worry they’re behind, consultants sell solutions to manufactured problems, and actual quality improvement gets lost in the noise.

Thinking differently here means recognizing that system quality exists on a spectrum, not as a binary state. A simple email archiving system doesn’t receive the same validation rigor as a batch manufacturing execution system—not because we’re cutting corners, but because risks are fundamentally different. This spectrum concept has been embedded in GAMP guidance for over a decade. The real work is implementing these principles consistently, not adopting new acronyms.
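To make the spectrum concrete, here is a minimal Python sketch that maps a system’s GAMP 5 software category and an assumed GxP impact level to a validation rigor tier. The category numbers (1, 3, 4, 5) follow GAMP 5; the `Impact` scale, the tier names, and the decision rules are hypothetical illustrations, not thresholds taken from GAMP guidance. A real program would justify each classification in a documented risk assessment.

```python
from enum import IntEnum


class GampCategory(IntEnum):
    """GAMP 5 software categories (category 2 was retired in GAMP 5)."""
    INFRASTRUCTURE = 1
    NON_CONFIGURED = 3
    CONFIGURED = 4
    CUSTOM = 5


class Impact(IntEnum):
    """Hypothetical GxP impact scale (patient safety, product quality, data integrity)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def validation_rigor(category: GampCategory, impact: Impact) -> str:
    """Return an illustrative rigor tier; these rules are assumptions, not GAMP text."""
    if impact is Impact.LOW:
        # Low GxP impact: lean on supplier documentation unless the code is bespoke.
        return "minimal" if category <= GampCategory.CONFIGURED else "targeted"
    if impact is Impact.HIGH and category >= GampCategory.CONFIGURED:
        # High impact plus configuration or custom code: scripted testing of critical functions.
        return "full"
    # Everything in between: testing focused on the GxP-relevant functions.
    return "targeted"


if __name__ == "__main__":
    # Hypothetical classifications for the two systems mentioned in the text.
    print("email archive:", validation_rigor(GampCategory.NON_CONFIGURED, Impact.LOW))
    print("batch MES:", validation_rigor(GampCategory.CONFIGURED, Impact.HIGH))
```

The specific cut-offs matter less than the shape of the decision: one function applied consistently yields different rigor for different risks, which is what the spectrum concept asks for.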

Regulatory Actions and Learning Opportunities

Throughout 2024-2025, I’ve analyzed numerous FDA warning letters and 483 observations as learning opportunities. In January 2025, “A Cautionary Tale from Sanofi’s FDA Warning Letter” examined the critical importance of thorough deviation investigations. The warning letter cited persistent CGMP violations, highlighting how organizations that fail to investigate deviations thoroughly miss opportunities to identify root causes, implement effective corrective actions, and prevent recurrence.

My analysis in “From PAI to Warning Letter – Lessons from Sanofi” traced how leak investigations became a leading indicator of systemic problems. The inspector’s initial clean bill of health for the leak deviation investigations suggests one of two things: there were too few problems to reveal a trend, or complacency had set in. When I published “Leaks in Single-Use Manufacturing” in February 2025, I explored how functionally closed systems create unique contamination risks that demand heightened vigilance.

The Sanofi case illustrates a critical “think differently” principle: investigations aren’t compliance exercises—they’re learning opportunities. As I emphasized in “Scale of Remediation Under a Consent Decree,” even organizations that implement quality improvements with great enthusiasm often see those gains gradually erode. This “quality backsliding” phenomenon happens when improvements aren’t embedded in organizational culture and systematic processes.

The July 2025 Catalent Form 483, which I analyzed in “When 483s Reveal Zemblanity,” provided another powerful example. Twenty hair contamination deviations, seven-month delays in supplier notification, and critical equipment failures dismissed as “not impacting SISPQ” (safety, identity, strength, purity, and quality) revealed what I identified as zemblanity: patterned, preventable misfortune arising from organizational design choices that quietly hardwire failure into operations. This wasn’t bad luck; it was a quality system that had normalized exactly the kinds of deviations that create inspection findings.
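A pattern like twenty recurring hair contamination deviations is obvious in hindsight and easy to miss one deviation at a time. As a hedged illustration, the Python sketch below counts deviations per category in a trailing window and flags categories that recur. The `Deviation` record, the 180-day window, and the threshold of three are assumptions chosen for the example; real values belong in a site’s trending procedure.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Deviation:
    opened: date
    category: str  # hypothetical classification, e.g. "hair contamination"


def recurring_categories(deviations: list[Deviation], as_of: date,
                         window_days: int = 180, threshold: int = 3) -> dict[str, int]:
    """Return categories with at least `threshold` deviations opened in the trailing window."""
    start = as_of - timedelta(days=window_days)
    counts = Counter(d.category for d in deviations if start < d.opened <= as_of)
    return {category: n for category, n in counts.items() if n >= threshold}


if __name__ == "__main__":
    # Hypothetical history: one hair contamination deviation per week, plus an unrelated event.
    history = [Deviation(date(2025, 1, 10) + timedelta(days=7 * i), "hair contamination")
               for i in range(20)]
    history.append(Deviation(date(2025, 3, 1), "label mix-up"))
    print(recurring_categories(history, as_of=date(2025, 6, 30)))
```

Each deviation, closed on its own as isolated operator error, looks minor; counted together, the category flags immediately. The flag is a prompt for a causal investigation of the pattern, not a conclusion in itself.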

Risk Management: From Theater to Science

Causal Reasoning Over Negative Reasoning

In May 2025, I published “Causal Reasoning: A Transformative Approach to Root Cause Analysis,” exploring Energy Safety Canada’s white paper on moving from “negative reasoning” to “causal reasoning” in investigations. This framework profoundly aligns with pharmaceutical quality challenges.

Negative reasoning focuses on what didn’t happen—failures to follow procedures, missing controls, absent documentation. It generates findings like “operator failed to follow SOP” or “inadequate training” without understanding why those failures occurred or how to prevent them systematically. Causal reasoning, conversely, asks: What actually happened? Why did it make sense to the people involved at the time? What system conditions made this outcome likely?

This shift transforms investigations from blame exercises into learning opportunities. When we investigate twenty hair contamination deviations using negative reasoning, we conclude that operators failed to follow gowning procedures. Causal reasoning might instead reveal that gowning procedure steps are ambiguous for certain equipment configurations, that training doesn’t address real-world challenges, and that production pressure creates incentives to rush.

The implications for “thinking differently” are profound. Negative reasoning produces superficial investigations that satisfy compliance requirements but fail to prevent recurrence. Causal reasoning builds understanding of how work actually happens, enabling system-level improvements that increase reliability. As I emphasized in the Catalent 483 analysis, this requires retraining investigators, implementing structured causal analysis tools, and creating cultures where understanding trumps blame.

Reducing Subjectivity in Quality Risk Management

My January 2025 piece “Reducing Subjectivity in Quality Risk Management” addressed how ICH Q9(R1) tackles persistent challenges with subjective risk assessments. The guideline introduces a “formality continuum” that aligns effort with complexity and emphasizes knowledge management to reduce uncertainty.

Subjectivity in risk management stems from poorly designed scoring systems, differing stakeholder perceptions, and cognitive biases. The solution isn’t eliminating human judgment—it’s structuring decision-making to minimize bias through cross-functional teams, standardized methodologies, and transparent documentation.

This connects directly to World Quality Week’s theme. Traditional risk management often becomes box-checking: complete the risk assessment template, assign severity and probability scores, document controls, and move on. Thinking differently means recognizing that the quality of risk decisions depends more on the expertise, diversity, and deliberation of the assessment team than on the sophistication of the scoring matrix.
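One concrete way a scoring system can be poorly designed is by multiplying ordinal scales. The short Python sketch below assumes a conventional 1 to 5 severity scale and 1 to 5 probability scale multiplied into a single score, and lists the cells that collapse to the same number. It illustrates the problem rather than recommending a method; ICH Q9(R1) does not prescribe any particular matrix.

```python
from itertools import product

SCALE = range(1, 6)  # assumed 1-5 ordinal scales for severity and probability


def risk_score(severity: int, probability: int) -> int:
    """Conventional multiplicative score (an assumed scheme, common in FMEA-style templates)."""
    return severity * probability


def collisions(scale=SCALE) -> dict[int, list[tuple[int, int]]]:
    """Group (severity, probability) pairs that produce the same score."""
    groups: dict[int, list[tuple[int, int]]] = {}
    for s, p in product(scale, scale):
        groups.setdefault(risk_score(s, p), []).append((s, p))
    return {score: pairs for score, pairs in groups.items() if len(pairs) > 1}


if __name__ == "__main__":
    distinct = {risk_score(s, p) for s, p in product(SCALE, SCALE)}
    print(f"{len(distinct)} distinct scores from 25 cells")
    for score, pairs in sorted(collisions().items()):
        print(score, pairs)
```

A severity-5, probability-1 event (rare but catastrophic) scores 5, exactly like a severity-1, probability-5 nuisance. Only 14 distinct values are reachable from the 25 cells, and the gaps between them carry no real meaning, which is why the team’s deliberation matters more than the arithmetic.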

In “Inappropriate Uses of Quality Risk Management” (August 2024), I explored how organizations misapply risk assessment to justify predetermined conclusions rather than genuinely evaluate alternatives. This “risk management theater” undermines stakeholder trust and creates vulnerability to regulatory scrutiny. Authentic risk management requires psychological safety for raising concerns, leadership commitment to acting on risk findings, and organizational discipline to follow the risk assessment wherever it leads.

The Effectiveness Paradox and Falsifiable Quality Systems

“The Effectiveness Paradox: Why ‘Nothing Bad Happened’ Doesn’t Mean Your Controls Work” (August 2025) examined how pharmaceutical organizations struggle to demonstrate that quality controls actually prevent problems rather than simply correlating with good outcomes.

The effectiveness paradox is simple: if your contamination control strategy works, you won’t see contamination. But if you don’t see contamination, how do you know it’s because your strategy works rather than because you got lucky? This creates what philosophers call an unfalsifiable hypothesis—a claim that can’t be tested or disproven.
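The paradox can be quantified with a standard statistical result. If a control is observed over n independent batches with zero contamination events, the exact one-sided upper confidence bound on the true event rate solves (1 - p)^n = 1 - confidence; the familiar “rule of three” approximates the 95% bound as 3/n. The Python sketch assumes a binomial model with independent batches and a constant underlying rate, which real processes only approximate.

```python
def upper_bound_zero_events(n: int, confidence: float = 0.95) -> float:
    """Exact one-sided upper confidence bound on an event rate after 0 events in n trials.

    Solves (1 - p) ** n == 1 - confidence for p, assuming independent
    trials (batches) with a constant event probability p.
    """
    if n <= 0:
        raise ValueError("n must be a positive number of trials")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    return 1 - (1 - confidence) ** (1 / n)


if __name__ == "__main__":
    for n in (30, 100, 300):
        exact = upper_bound_zero_events(n)
        print(f"{n:>3} clean batches: rate could still be up to {exact:.2%} "
              f"(rule of three: {3 / n:.2%})")
```

Thirty clean batches still leave room, at 95% confidence, for a true contamination rate near 9.5%. Absence of events alone cannot separate an effective control from luck, which is the gap falsifiable quality systems aim to close.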

The solution requires building what I call “falsifiable quality systems”—systems designed to fail predictably in ways that generate learning rather than hiding until catastrophic breakdown. This isn’t celebrating failure; it’s building intelligence into systems so that when failure occurs (as it inevitably will), it happens in controlled, detectable ways that enable improvement.

This radically different way of thinking challenges quality professionals’ instincts. We’re trained to prevent failure, not design for it. But as I discussed on The Risk Revolution podcast (see “Recent Podcast Appearance: Risk Revolution,” September 2025), systems that never fail either aren’t being tested rigorously enough or aren’t operating in conditions that reveal their limitations. Falsifiable quality thinking embraces controlled challenges, systematic testing, and transparent learning.
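One way to turn a control claim into a falsifiable hypothesis is a challenge test: seed items the control must catch and count the misses. The Python sketch below assumes a hypothetical claim that visual inspection detects particles of 50 µm and larger, and a hypothetical detector that in fact only responds from 100 µm. A single missed challenge refutes the claim, which is exactly what makes the claim testable.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class ChallengeResult:
    challenges: int
    detected: int

    @property
    def falsified(self) -> bool:
        # The claim "the control catches every seeded defect" is refuted by a single miss.
        return self.detected < self.challenges


def challenge_control(detector: Callable[[dict], bool], seeded: Iterable[dict]) -> ChallengeResult:
    """Run a detector against deliberately seeded defects and tally detections."""
    items = list(seeded)
    return ChallengeResult(challenges=len(items),
                           detected=sum(1 for item in items if detector(item)))


if __name__ == "__main__":
    # Hypothetical detector: only responds to particles of 100 µm or larger.
    def inspection(item: dict) -> bool:
        return item["particle_um"] >= 100

    # Challenge set spans the claimed detection range (50 µm and up).
    seeded = [{"particle_um": size} for size in (50, 75, 100, 150, 200)]
    result = challenge_control(inspection, seeded)
    print(result, "falsified:", result.falsified)
```

A practical challenge set would also include clean items, so that false alarms are measured alongside misses; the sketch leaves that out for brevity.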

Quality Culture: The Foundation of Everything

Complacency Cycles and Cultural Erosion

In February 2025, “Complacency Cycles and Their Impact on Quality Culture” explored how complacency operates as a silent saboteur, eroding innovation and undermining the foundations of quality culture. I identified a four-phase cycle: stagnation (initial success breeds overconfidence), normalization of risk (minor deviations become habitual), crisis trigger (accumulated oversights culminate in failures), and temporary vigilance (post-crisis measures that fade without systemic change).

This cycle threatens every quality culture, regardless of maturity. Even organizations with strong quality systems can drift into complacency when success creates overconfidence or when operational pressures gradually normalize risk tolerance. The NASA Columbia disaster exemplified how normalized risk-taking eroded safety protocols over time—a pattern pharmaceutical quality professionals ignore at their peril.

Breaking complacency cycles demands what I call “anti-complacency practices”—systematic interventions that institutionalize vigilance. These include continuous improvement methodologies integrated into workflows, real-time feedback mechanisms that create visible accountability, and immersive learning experiences that make risks tangible. A medical device company’s “Harm Simulation Lab” that I described exposed engineers to consequences of design oversights, leading participants to identify 112% more risks in subsequent reviews compared to conventional training.

Thinking differently about quality culture means recognizing it’s not something you build once and maintain through slogans and posters. Culture requires constant nurturing through leadership behaviors, resource allocation, communication patterns, and the thousand small decisions that signal what the organization truly values. As I emphasized, quality culture exists in perpetual tension with complacency—the former pulling toward excellence, the latter toward entropy.

Equanimity: The Overlooked Foundation

“Equanimity: The Overlooked Foundation of Quality Culture” (March 2025) explored a dimension rarely discussed in quality literature: the role of emotional stability and balanced judgment in quality decision-making. Equanimity, meaning mental calmness and composure in difficult situations, enables quality professionals to respond to crises, navigate organizational politics, and make sound judgments under pressure.

Quality work involves constant pressure: production deadlines, regulatory scrutiny, deviation investigations, audit findings, and stakeholder conflicts. Without equanimity, these pressures trigger reactive decision-making, defensive behaviors, and risk-averse cultures that stifle improvement. Leaders who panic during audits create teams that hide problems. Professionals who personalize criticism build systems focused on blame rather than learning.

Cultivating equanimity requires deliberate practice: mindfulness approaches that build emotional regulation, psychological safety that enables vulnerability, and organizational structures that buffer quality decisions from operational pressure. When quality professionals can maintain composure while investigating serious deviations, when they can surface concerns without fear of blame, and when they can engage productively with regulators despite inspection stress—that’s when quality culture thrives.

This represents a profoundly different way of thinking about quality leadership. We typically focus on technical competence, regulatory knowledge, and process expertise. But the most technically brilliant quality professional who loses composure under pressure, who takes criticism personally, or who cannot navigate organizational politics will struggle to drive meaningful improvement. Equanimity isn’t soft skill window dressing—it’s foundational to quality excellence.

Building Operational Resilience Through Cognitive Excellence

My August 2025 piece “Building Operational Resilience Through Cognitive Excellence” connected quality culture to operational resilience by examining how cognitive limitations and organizational biases inhibit comprehensive hazard recognition. Research demonstrates that organizations with strong risk management cultures are significantly less likely to experience damaging operational risk events.

The connection is straightforward: quality culture determines how organizations identify, assess, and respond to risks. Organizations with mature cultures demonstrate superior capability in preventing issues, detecting problems early, and implementing effective corrective actions addressing root causes. Recent FDA warning letters consistently identify cultural deficiencies underlying technical violations—insufficient Quality Unit authority, inadequate management commitment, systemic failures in risk identification and escalation.

Cognitive excellence in quality requires multiple capabilities: pattern recognition that identifies weak signals before they become crises, systems thinking that traces cascading effects, and decision-making frameworks that manage uncertainty without paralysis. Organizations build these capabilities through training, structured methodologies, cross-functional collaboration, and cultures that value inquiry over certainty.

This aligns perfectly with World Quality Week’s call to think differently. Traditional quality approaches focus on documenting what we know, following established procedures, and demonstrating compliance. Cognitive excellence demands embracing what we don’t know, questioning established assumptions, and building systems that adapt as understanding evolves. It’s the difference between quality systems that maintain stability and quality systems that enable growth.

The Digital Transformation Imperative

Throughout 2024-2025, I’ve tracked digital transformation’s impact on pharmaceutical quality. The Draft EU GMP Chapter 4 (2025), which I analyzed in multiple posts, formalizes ALCOA++ principles as the foundation for data integrity. This represents the first comprehensive regulatory codification of the expanded data integrity principles: Attributable, Legible, Contemporaneous, Original, Accurate, Complete, Consistent, Enduring, Available, and Traceable.

In “Draft Annex 11 Section 10: ‘Handling of Data’” (July 2025), I emphasized that bringing controls into compliance with Section 10 is a strategic imperative. Organizations that move fastest will spend less effort in the long run, while those that delay face mounting technical debt and compliance risk. The draft Annex 11 introduces sophisticated requirements for identity and access management (IAM), representing what I called “a complete philosophical shift from ‘trust but verify’ to ‘prove everything, everywhere, all the time.’”

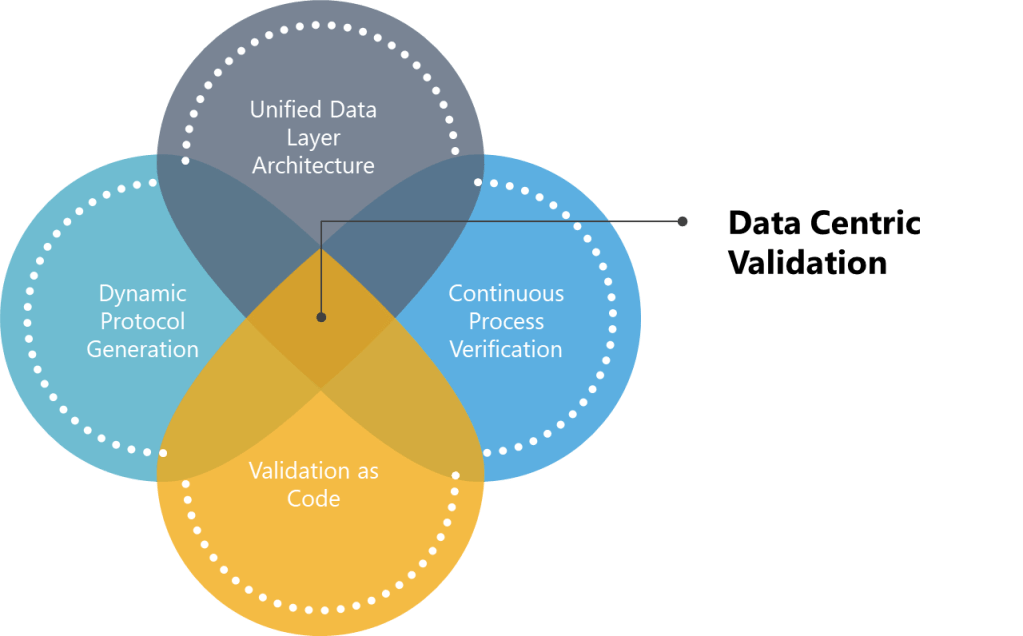

The validation landscape shows similar digital acceleration. As I documented in the 2025 State of Validation analysis, 93% of organizations either use or plan to adopt digital validation systems. Continuous Process Verification (CPV) has emerged as a cornerstone, with IoT sensors and real-time analytics enabling proactive quality management. By aligning with ICH Q10’s lifecycle approach, CPV transforms validation from a compliance exercise into a strategic asset.
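CPV programs commonly rely on statistical process control to turn a stream of measurements into early signals. As a sketch under stated assumptions, the Python below computes Shewhart individuals-chart limits from a baseline (sigma estimated as the average moving range divided by the constant d2 = 1.128) and applies two common signal rules: a point beyond the 3-sigma limits, and eight consecutive points on one side of the center line. The baseline values and the choice of rules are illustrative; a real CPV plan would define and justify its own.

```python
from statistics import mean


def individuals_limits(baseline: list[float]) -> tuple[float, float, float]:
    """Return (LCL, center, UCL) for an individuals chart built from baseline data."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points for a moving range")
    center = mean(baseline)
    mr_bar = mean(abs(b - a) for a, b in zip(baseline, baseline[1:]))
    sigma = mr_bar / 1.128  # d2 constant for moving ranges of size 2
    return center - 3 * sigma, center, center + 3 * sigma


def signals(values: list[float], limits: tuple[float, float, float], run_length: int = 8):
    """Yield (index, reason) for points that violate either signal rule."""
    lcl, center, ucl = limits
    run_side, run = 0, 0
    for i, x in enumerate(values):
        if x < lcl or x > ucl:
            yield i, "beyond 3-sigma limit"
        side = (x > center) - (x < center)  # +1 above, -1 below, 0 on the center line
        if side == 0:
            run = 0
        elif side == run_side:
            run += 1
        else:
            run = 1
        run_side = side
        if run == run_length:
            yield i, f"{run_length} consecutive points on one side of center"


if __name__ == "__main__":
    baseline = [10.0, 10.2, 9.9, 10.1, 9.8, 10.0, 10.1, 9.9]  # hypothetical assay results
    limits = individuals_limits(baseline)
    new_points = [10.1] * 8 + [10.9]  # a small sustained shift, then a spike
    for index, reason in signals(new_points, limits):
        print(index, reason)
```

The eight points at 10.1 each sit comfortably inside the control limits; only the run rule flags them as a sustained shift, one point before the spike at index 8 breaches the upper limit.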

But technology alone doesn’t constitute “thinking differently.” In “Section 4 of Draft Annex 11: Quality Risk Management” (August 2025), I argued that this section serves as the philosophical and operational backbone for everything else in the regulation. Every validation decision must be traceable to a specific risk assessment that considers the system’s characteristics and its GMP role. This risk-based approach rewards organizations that invest in comprehensive assessment and penalizes those that rely on generic templates.

The key insight: digital tools amplify whatever thinking underlies their use. Digital validation systems applied with a template mentality simply automate bad practices. But digital tools supporting genuinely risk-based, scientifically justified approaches enable quality management that paper systems cannot deliver: real-time monitoring, predictive analytics, integrated data analysis, and adaptive control strategies.

Artificial Intelligence: Promise and Peril

In September 2025, “The Expertise Crisis: Why AI’s War on Entry-Level Jobs Threatens Quality’s Future” explored how pharmaceutical organizations rushing to harness AI risk creating an expertise crisis that threatens quality’s foundations. Research showing a 13% decline in entry-level opportunities for young workers since AI deployment reveals a dangerous trend.

The false economy of AI substitution misunderstands how expertise develops. Senior risk management professionals reviewing contamination events can quickly identify failure modes because they built foundational expertise through years of investigating routine deviations, participating in CAPA teams, and learning to distinguish significant risks from minor variations. When AI handles initial risk assessments and senior professionals review only the outputs, we create expertise hollowing: organizations that appear capable on the surface but lack the deep competency that complex challenges demand.

This connects to World Quality Week’s theme through a critical question: Are we thinking differently about quality in ways that build capability, or are we simply automating away the learning opportunities that create expertise? As I argued, the choice between eliminating entry-level positions and redesigning them to maximize learning value while leveraging AI appropriately will determine whether we have quality professionals capable of maintaining systems in 2035.

The regulatory landscape is adapting. My July 2025 piece “Regulatory Changes I am Watching” documented a wave of AI guidance from regulators and industry bodies. The EMA’s reflection paper, the MHRA’s AI regulatory strategy, and EFPIA’s position on AI in GMP manufacturing all emphasize risk-based approaches requiring transparency, validation, and ongoing performance monitoring. The message is clear: AI is a tool requiring human oversight, not a replacement for human judgment.

Data Integrity: The Non-Negotiable Foundation

ALCOA++ as Strategic Asset

Data integrity has been a persistent theme throughout my writing. As I emphasized in the 2025 validation analysis, “we are only as good as our data” encapsulates the existential reality of regulated industries. The ALCOA++ framework provides an architectural blueprint for embedding data integrity into every layer of the quality system.

In “Pillars of Good Data” (October 2024), I explored how data governance, data quality, and data integrity work together to create robust data management. Data governance establishes policies and accountabilities. Data quality ensures fitness for use. Data integrity ensures trustworthiness through controls that prevent and detect data manipulation, loss, or compromise.

These pillars support continuous improvement cycles: governance policies inform quality and integrity standards, assessments provide feedback on governance effectiveness, and that feedback refines policies and improves practice. Organizations that treat these concepts as separate compliance activities miss the synergy that makes data management truly robust.

The Draft Chapter 4 analysis revealed how data integrity requirements have evolved from general principles into specific technical controls. Hybrid record systems (paper plus electronic) require demonstrable tamper-evidence through hashes or equivalent mechanisms. Electronic signature requirements demand multi-factor authentication, time-zoned audit trails, and explicit non-repudiation provisions. Open systems such as SaaS platforms require compliance with standards like eIDAS for trust service providers.
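Hash-based tamper evidence can be illustrated with a hash chain, in which each audit entry’s SHA-256 digest covers both the entry and the previous digest, so editing any earlier entry breaks every link after it. This is a minimal sketch using Python’s standard `hashlib` and `json` modules; it is one possible mechanism, not the one Draft Chapter 4 mandates, and the record fields are hypothetical. On its own, a chain only proves integrity if the latest digest is also stored or signed somewhere the editor cannot reach.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys, fixed separators) so the same entry always hashes the same way.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":")) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain: list[dict], entry: dict) -> None:
    """Append an entry, linking it to the digest of the previous link."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"entry": entry, "prev": prev, "hash": _digest(entry, prev)})


def verify(chain: list[dict]) -> bool:
    """Recompute every digest; editing, reordering, or deleting any link except the newest fails."""
    prev = GENESIS
    for link in chain:
        if link["prev"] != prev or link["hash"] != _digest(link["entry"], prev):
            return False
        prev = link["hash"]
    return True


if __name__ == "__main__":
    chain: list[dict] = []
    append(chain, {"record": "BR-001", "field": "pH", "value": "7.2", "user": "jdoe"})
    append(chain, {"record": "BR-001", "field": "pH", "value": "7.3", "user": "asmith"})
    print("intact:", verify(chain))
    chain[0]["entry"]["value"] = "7.1"  # simulate a silent edit of the first entry
    print("after edit:", verify(chain))
```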

Thinking differently about data integrity means moving from reactive remediation (responding to inspector findings) to proactive risk assessment (identifying vulnerabilities before they’re exploited). In my analysis of multiple warning letters throughout 2024-2025, data integrity failures consistently appeared alongside other quality system weaknesses: inadequate investigations, insufficient change control, and poor CAPA effectiveness. Data integrity isn’t standalone compliance; it’s a litmus test for the whole quality system, revealing organizational discipline, technical capability, and cultural commitment.

The Problem with High-Level Requirements

In August 2025, “The Problem with High-Level Regulatory User Requirements” examined why specifying “Meet Part 11” as a user requirement is bad form. High-level requirements like this don’t tell implementers what the system must actually do; they delegate regulatory interpretation to vendors and implementation teams without organization-specific context.

Effective requirements translate regulatory expectations into specific, testable, implementable system behaviors: “System shall enforce unique user IDs that cannot be reassigned,” “System shall record complete audit trail including user ID, date, time, action type, and affected record identifier,” “System shall prevent modification of closed records without documented change control approval.” These requirements can be tested, verified, and traced to specific regulatory citations.
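Requirements written this way can be exercised directly. The Python sketch below is a hypothetical in-memory record store, not a Part 11 implementation, with one guard per example requirement: user IDs are never reissued, every change writes an audit entry with user, timestamp, action, and record identifier, and a closed record can only be modified when a change-control reference is supplied. The sketch only checks that a reference is present; confirming that the change was actually approved would need a link to a change-control system. Class names, method names, and the change-control identifier are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


class ComplianceError(Exception):
    """Raised when an operation would violate one of the example requirements."""


@dataclass
class RecordStore:
    issued_ids: set = field(default_factory=set)    # every user ID ever issued
    active_users: set = field(default_factory=set)
    records: dict = field(default_factory=dict)     # record_id -> {"value": ..., "closed": bool}
    audit_trail: list = field(default_factory=list)

    def create_user(self, user_id: str) -> None:
        # Requirement: unique user IDs that cannot be reassigned.
        if user_id in self.issued_ids:
            raise ComplianceError(f"user ID {user_id!r} was already issued")
        self.issued_ids.add(user_id)
        self.active_users.add(user_id)

    def deactivate_user(self, user_id: str) -> None:
        # The ID stays in issued_ids, so it can never be handed to someone else.
        self.active_users.discard(user_id)

    def _require_active(self, user_id: str) -> None:
        if user_id not in self.active_users:
            raise ComplianceError(f"user {user_id!r} is unknown or inactive")

    def _log(self, user_id: str, action: str, record_id: str,
             change_control: Optional[str] = None) -> None:
        # Requirement: audit trail with user ID, date/time, action type, and record identifier.
        self.audit_trail.append({
            "user": user_id,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "record": record_id,
            "change_control": change_control,
        })

    def write(self, user_id: str, record_id: str, value: str,
              change_control: Optional[str] = None) -> None:
        self._require_active(user_id)
        existing = self.records.get(record_id)
        # Requirement: no modification of closed records without a change-control reference.
        if existing and existing["closed"] and change_control is None:
            raise ComplianceError(f"record {record_id!r} is closed; change control required")
        self.records[record_id] = {"value": value, "closed": bool(existing and existing["closed"])}
        self._log(user_id, "create" if existing is None else "modify", record_id, change_control)

    def close(self, user_id: str, record_id: str) -> None:
        self._require_active(user_id)
        self.records[record_id]["closed"] = True
        self._log(user_id, "close", record_id)


if __name__ == "__main__":
    store = RecordStore()
    store.create_user("jdoe")
    store.write("jdoe", "BR-001", "pH 7.2")
    store.close("jdoe", "BR-001")
    try:
        store.write("jdoe", "BR-001", "pH 7.4")
    except ComplianceError as err:
        print("blocked:", err)
    store.write("jdoe", "BR-001", "pH 7.4", change_control="CC-0042")  # hypothetical CC number
    print([entry["action"] for entry in store.audit_trail])
```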

This illustrates a broader “think differently” principle: compliance isn’t achieved by citing regulations. It’s achieved by understanding what regulations require in your specific context and building capabilities that deliver those requirements. Organizations that treat compliance as a citation exercise miss the substance of what regulation demands. Deep understanding enables defensible, effective compliance; superficial citation creates vulnerability to inspectional findings and quality failures.

Process Excellence and Organizational Design

Process Mapping and Business Process Management

Between November 2024 and May 2025, I published a series exploring process management fundamentals. “Process Mapping as a Scaling Solution (part 1)” and subsequent posts examined how process mapping, SIPOC analysis, value chain models, and business process management (BPM) frameworks enable organizational scaling while maintaining quality.

The key insight: BPM functions as both an adaptive framework and a prescriptive methodology, with process architecture connecting strategic vision to operational reality. Organizations struggling with quality issues often lack clear process understanding: roles are ambiguous, handoffs undefined, and decision authority unclear. Process mapping makes implicit work visible, enabling systematic improvement.

But mapping alone doesn’t create excellence. As I explored in “SIPOC” (May 2025), the real power comes from integrating multiple perspectives into a coherent understanding of how work flows: strategic (value chain), operational (SIPOC), and tactical (detailed process maps). This enables targeted interventions. If raw material shortages plague operations, SIPOC analysis reveals the supplier relationships and bottlenecks that require operational-layer solutions. If customer satisfaction declines, value chain analysis identifies the strategic-layer misalignment that requires service redesign.
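Making implicit work visible can be partly mechanized. The Python sketch below encodes the supplier-input-process-output portion of a SIPOC for a simple sequential process and reports any step input that neither a supplier nor an earlier step provides, which is one symptom of an undefined handoff. The step names and artifacts are hypothetical, and processes with loops or parallel branches would need a graph rather than a list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    inputs: frozenset[str]
    outputs: frozenset[str]


def undefined_handoffs(supplier_inputs: set[str], steps: list[Step]) -> dict[str, list[str]]:
    """Map each step to the inputs that no supplier and no earlier step provides."""
    available = set(supplier_inputs)
    gaps: dict[str, list[str]] = {}
    for step in steps:  # assumes the steps run in the listed order
        missing = step.inputs - available
        if missing:
            gaps[step.name] = sorted(missing)
        available |= step.outputs
    return gaps


if __name__ == "__main__":
    suppliers = {"raw material", "batch record template"}
    process = [
        Step("dispense", frozenset({"raw material", "batch record template"}),
             frozenset({"dispensed material"})),
        Step("compound", frozenset({"dispensed material", "approved formula"}),
             frozenset({"bulk product"})),
        Step("fill", frozenset({"bulk product"}), frozenset({"filled units"})),
    ]
    print(undefined_handoffs(suppliers, process))
```

In this toy map nobody supplies the approved formula to compounding: the handoff presumably exists in practice but not on paper, which is where ambiguity about roles and decision authority tends to hide.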

This connects to “thinking differently” through systems thinking. Traditional quality approaches focus on local optimization—making individual departments or processes more efficient. Process architecture thinking recognizes that local optimization can create global problems if process interdependencies aren’t understood. Sometimes making one area more efficient creates bottlenecks elsewhere or reduces overall system effectiveness. Systems-level understanding enables genuine optimization.
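A deliberately simple steady-state model shows how a local improvement can leave system output unchanged while moving the problem elsewhere. The Python sketch assumes a serial line with fixed weekly capacities and constant demand, ignoring variability, rework, and batching; the stage names and numbers are hypothetical.

```python
def serial_line(stages: list[tuple[str, float]], demand: float) -> tuple[float, dict[str, float]]:
    """Return (output per week, weekly backlog growth in front of each stage).

    Each stage passes on at most its capacity; anything above that queues up.
    """
    flow = demand
    backlog: dict[str, float] = {}
    for name, capacity in stages:
        backlog[name] = max(0.0, flow - capacity)
        flow = min(flow, capacity)
    return flow, backlog


if __name__ == "__main__":
    before = [("dispensing", 12), ("compounding", 10), ("filling", 8), ("QC release", 6)]
    after = [("dispensing", 12), ("compounding", 10), ("filling", 16), ("QC release", 6)]
    for label, line in (("before", before), ("after faster filling", after)):
        output, backlog = serial_line(line, demand=12)
        print(label, "output:", output, "backlog growth:", backlog)
```

Doubling filling capacity clears filling’s own queue, a local win, yet weekly output stays at 6 batches while the queue in front of QC release grows twice as fast. Only an improvement at the bottleneck raises output.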

Organizational Structure and Competency

Several pieces explored the foundations of organizational excellence. “Building a Competency Framework for Quality” (April 2025) examined how defining clear competencies for quality roles enables targeted development, objective assessment, and succession planning. Without competency frameworks, training becomes ad hoc, capability gaps remain invisible, and organizational knowledge concentrates in individuals rather than systems.

“The Minimal Viable Risk Assessment Team” (June 2025) addressed what ineffective risk management actually costs. Beyond obvious impacts like unidentified risks and poorly prioritized resources, ineffective risk management generates rework, creates regulatory findings, erodes stakeholder trust, and perpetuates organizational fragility. Building minimum viable teams requires clear role definitions, diverse expertise, defined decision-making processes, and systematic follow-through.

In “The GAMP5 System Owner and Process Owner and Beyond,” I explored how defining accountable individuals in processes is critical to quality system effectiveness. System owners and process owners provide single points of accountability, enable efficient decision-making, and ensure processes have champions driving improvement. Without clear ownership, responsibilities diffuse, problems persist, and improvement initiatives stall.

These organizational elements—competency frameworks, team structures, clear accountabilities—represent infrastructure enabling quality excellence. Organizations can have sophisticated processes and advanced technologies, but without people who know what they’re doing, teams structured for success, and clear accountability for outcomes, quality remains aspirational rather than operational.

Looking Forward: The Quality Professional’s Mandate

As World Quality Week 2025 challenges us to think differently about quality, what does this mean practically for pharmaceutical quality professionals?

First, it means embracing discomfort with certainty. Quality has traditionally emphasized control, predictability, and adherence to established practices. Thinking differently requires acknowledging uncertainty, questioning assumptions, and adapting as we learn. This doesn’t mean abandoning scientific rigor—it means applying that rigor to examining our own assumptions and biases.

Second, it demands moving from a compliance focus to value creation. Compliance is necessary but insufficient. As I’ve argued throughout the year, quality systems should protect patients, yes, but they should also enable innovation, build organizational capability, and create competitive advantage. When quality becomes an enabling force rather than a constraint, organizations thrive.

Third, it requires building systems that learn. Traditional quality approaches document what we know and execute accordingly. Learning quality systems actively test assumptions, detect weak signals, adapt to new information, and continuously improve understanding. Falsifiable quality systems, causal investigation approaches, and risk-based thinking all build an organization’s capacity to learn.

Fourth, it necessitates cultural transformation alongside technical improvement. Every technical quality challenge has cultural dimensions—how people communicate, how decisions get made, how problems get raised, how learning happens. Organizations can implement sophisticated technologies and advanced methodologies, but without cultures supporting those tools, sustainable improvement remains elusive.

Finally, thinking differently about quality means embracing our role as organizational change agents. Quality professionals can’t wait for permission to improve systems, challenge assumptions, or drive transformation. We must lead these changes, making the case for new approaches, building coalitions, and demonstrating value. World Quality Week provides a platform for this leadership. Use it.

The Quality Beat

In my August 2025 piece “Finding Rhythm in Quality Risk Management,” I explored how predictable rhythms in quality activities—regular assessment cycles, structured review processes, systematic verification—create stable foundations enabling innovation. The paradox is that constraint enables creativity—teams knowing they have regular, structured opportunities for risk exploration are more willing to raise difficult questions and propose unconventional solutions.

This captures what thinking differently about quality truly means. It’s not abandoning structure for chaos, or replacing discipline with improvisation. It’s finding our quality beat—the rhythm at which our organizations can sustain excellence, the cadence enabling both stability and adaptation, the tempo at which learning and execution harmonize.

World Quality Week 2025 invites us to discover that rhythm in our own contexts. The themes I’ve explored throughout 2024 and 2025—from causal reasoning to falsifiable systems, from complacency cycles to cognitive excellence, from digital transformation to expertise development—all contribute to quality excellence that goes beyond compliance to create genuine value.

As we celebrate the people, ideas, and practices shaping quality’s future, let’s commit to more than celebration. Let’s commit to transformation—in our systems, our organizations, our profession, and ourselves. Quality’s golden thread runs throughout business because quality professionals weave it there, one decision at a time, one system at a time, one transformation at a time.

The future of quality isn’t something that happens to us. It’s something we create by thinking differently, acting deliberately, and leading courageously. Let’s make World Quality Week 2025 the moment we choose that future together.