How the Quality Industry Repackaged Existing Practices and Called Them Revolutionary

As someone who has spent decades implementing computer system validation practices across multiple regulated environments, I consistently find myself skeptical of the breathless excitement surrounding Computer Software Assurance (CSA). The pharmaceutical quality community’s enthusiastic embrace of CSA as a revolutionary departure from traditional Computer System Validation (CSV) represents a troubling case study in how our industry allows consultants to rebrand established practices as breakthrough innovations, selling back to us concepts we’ve been applying for over two decades.

The truth is both simpler and more disappointing than the CSA evangelists would have you believe: there is nothing fundamentally new in Computer Software Assurance that wasn’t already embedded in risk-based validation approaches, GAMP 5 principles, or existing regulatory guidance. What we’re witnessing is not innovation but sophisticated marketing: a coordinated effort to create artificial urgency around “modernizing” validation practices that were already fit for purpose.

The Historical Context: Why We Need to Remember Where We Started

To understand why CSA represents more repackaging than revolution, we must revisit the regulatory and industry context from which our current validation practices emerged. Computer system validation didn’t develop in a vacuum—it arose from genuine regulatory necessity in response to real-world failures that threatened patient safety and product quality.

The origins of systematic software validation in regulated industries trace back to military applications in the 1960s, specifically independent verification and validation (IV&V) processes developed for critical defense systems. The pharmaceutical industry’s adoption of these concepts began in earnest during the 1970s as computerized systems became more prevalent in drug manufacturing and quality control operations.

The regulatory foundation for what we now call computer system validation was established through a series of FDA guidance documents throughout the 1980s and 1990s. The 1983 FDA “Guide to Inspection of Computerized Systems in Drug Processing” represented the first systematic approach to ensuring the reliability of computer-based systems in pharmaceutical manufacturing. This was followed by increasingly sophisticated guidance, culminating in 21 CFR Part 11 in 1997 and the “General Principles of Software Validation” in 2002.

These regulations didn’t emerge from academic theory—they were responses to documented failures. The FDA’s analysis of 3,140 medical device recalls between 1992 and 1998 revealed that 242 (7.7%) were attributable to software failures, with 192 of those (79%) caused by defects introduced during software changes after initial deployment. Computer system validation developed as a systematic response to these real-world risks, not as an abstract compliance exercise.
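The percentages in that analysis follow directly from the counts quoted above. A quick check, written in Python purely for illustration (the variable names are mine, not the FDA's):

```python
# Counts as quoted in the text (FDA analysis of device recalls, 1992-1998).
total_recalls = 3140
software_recalls = 242
post_change_recalls = 192  # software recalls traced to changes after deployment

software_share = software_recalls / total_recalls
post_change_share = post_change_recalls / software_recalls

print(f"Software-related share of all recalls: {software_share:.1%}")
print(f"Share of software recalls caused by changes: {post_change_share:.0%}")
```

The two printed figures are 7.7% and 79%, matching the numbers cited.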

The GAMP Evolution: Building Risk-Based Practices from the Ground Up

Perhaps no single development better illustrates how the industry has already solved the problems CSA claims to address than the evolution of the Good Automated Manufacturing Practice (GAMP) guidelines. GAMP didn’t start as a theoretical framework; it emerged from practical necessity when FDA inspectors began raising concerns about computer system validation during inspections of UK pharmaceutical facilities in 1991.

The GAMP community’s response was methodical and evidence-based. Rather than creating bureaucratic overhead, GAMP sought to provide a practical framework that would satisfy regulatory requirements while enabling business efficiency. Each revision of GAMP incorporated lessons learned from real-world implementations:

GAMP 1 (1994) focused on standardizing validation activities for computerized systems, addressing the inconsistency that characterized early validation efforts.

GAMP 2 and 3 (1995-1998) introduced early concepts of risk-based approaches and expanded scope to include IT infrastructure, recognizing that validation needed to be proportional to risk rather than uniformly applied.

GAMP 4 (2001) emphasized a full system lifecycle model and defined clear validation deliverables, establishing the structured approach that remains fundamentally unchanged today.

GAMP 5 (2008) represented a decisive shift toward risk-based validation, promoting scalability and efficiency while maintaining regulatory compliance. This version explicitly recognized that validation effort should be proportional to the system’s impact on product quality, patient safety, and data integrity.

The GAMP 5 software categorization system (Categories 1, 3, 4, and 5, with Category 2 eliminated as obsolete) provided the risk-based framework that CSA proponents now claim as innovative. Category 1 infrastructure software requires minimal validation beyond verification of installation and version control, while a Category 5 custom application demands comprehensive lifecycle validation including detailed functional and design specifications. This isn’t just risk-based thinking; it’s risk-based practice that has been successfully implemented across thousands of systems for over fifteen years.

The Risk-Based Spectrum: What GAMP Already Taught Us

One of the most frustrating aspects of CSA advocacy is how it presents risk-based validation as a novel concept. The pharmaceutical industry has been applying risk-based approaches to computer system validation since the early 2000s, not as a revolutionary breakthrough, but as basic professional competence.

The foundation of risk-based validation rests on a simple principle: validation rigor should be proportional to the potential impact on product quality, patient safety, and data integrity. This principle was explicitly articulated in ICH Q9 (Quality Risk Management) and embedded throughout GAMP 5, creating what is effectively a validation spectrum rather than a binary validated/not-validated state.

At the lower end of this spectrum, we find systems with minimal GMP impact—infrastructure software, standard office applications used for non-GMP purposes, and simple monitoring tools that generate no critical data. For these systems, validation consists primarily of installation verification and fitness-for-use confirmation, with minimal documentation requirements.

In the middle of the spectrum are configurable commercial systems—LIMS, ERP modules, and manufacturing execution systems that require configuration to meet specific business needs. These systems demand functional testing of configured elements, user acceptance testing, and ongoing change control, but can leverage supplier documentation and industry standard practices to streamline validation efforts.

At the high end of the spectrum are custom applications and systems with direct impact on batch release decisions, patient safety, or regulatory submissions. These systems require comprehensive validation including detailed functional specifications, extensive testing protocols, and rigorous change control procedures.

The elegance of this approach is that it scales validation effort appropriately while maintaining consistent quality outcomes. A risk assessment determines where on the spectrum a particular system falls, and validation activities align accordingly. This isn’t theoretical—it’s been standard practice in well-run validation programs for over a decade.
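To make the spectrum concrete, here is a minimal sketch of how a risk assessment outcome might map to validation activities. It is an illustration under stated assumptions, not a GAMP artifact: the `SystemProfile` fields, the `required_rigor` rules, and the three-tier split are hypothetical simplifications, and the activity lists simply paraphrase the three bands described above.

```python
from dataclasses import dataclass
from enum import Enum


class Rigor(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Activities per tier, paraphrased from the spectrum described in the text.
ACTIVITIES = {
    Rigor.LOW: ["installation verification", "fitness-for-use confirmation"],
    Rigor.MEDIUM: [
        "functional testing of configured elements",
        "user acceptance testing",
        "ongoing change control",
        "leverage supplier documentation",
    ],
    Rigor.HIGH: [
        "detailed functional specifications",
        "extensive testing protocols",
        "rigorous change control",
    ],
}


@dataclass(frozen=True)
class SystemProfile:
    name: str
    gamp_category: int   # 1, 3, 4, or 5 (GAMP 5 software categories)
    gmp_relevant: bool   # hypothetical flag: does the system handle GMP data?
    direct_impact: bool  # hypothetical flag: batch release, patient safety, submissions


def required_rigor(system: SystemProfile) -> Rigor:
    """Place a system on the spectrum (simplified, assumed rules)."""
    if system.gamp_category not in (1, 3, 4, 5):
        raise ValueError(f"unknown GAMP category: {system.gamp_category}")
    # Custom code or direct impact lands at the high end.
    if system.gamp_category == 5 or system.direct_impact:
        return Rigor.HIGH
    # Infrastructure software and non-GMP uses sit at the low end.
    if system.gamp_category == 1 or not system.gmp_relevant:
        return Rigor.LOW
    return Rigor.MEDIUM


systems = [
    SystemProfile("email archive", 3, gmp_relevant=False, direct_impact=False),
    SystemProfile("LIMS", 4, gmp_relevant=True, direct_impact=False),
    SystemProfile("batch MES", 4, gmp_relevant=True, direct_impact=True),
]
for s in systems:
    tier = required_rigor(s)
    print(f"{s.name}: {tier.value} -> {', '.join(ACTIVITIES[tier])}")
```

A real program would derive these inputs from a documented risk assessment rather than two boolean flags; the point is only that the mapping from risk to effort is explicit and repeatable.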

The 2003 FDA Guidance: The CSA Framework Hidden in Plain Sight

Perhaps the most damning evidence that CSA represents repackaging rather than innovation lies in the 2003 FDA guidance “Part 11, Electronic Records; Electronic Signatures — Scope and Application.” This guidance, issued over twenty years ago, contains virtually every principle that CSA advocates now present as revolutionary insights.

The 2003 guidance established several critical principles that directly anticipate CSA approaches:

- Narrow Scope Interpretation: The FDA explicitly stated that Part 11 would only be enforced for records required to be kept where electronic versions are used in lieu of paper, avoiding the over-validation that characterized early Part 11 implementations.

- Risk-Based Enforcement: Rather than treating Part 11 as a checklist, the FDA indicated that enforcement priorities would be risk-based, focusing on systems where failures could compromise data integrity or patient safety.

- Legacy System Pragmatism: The FDA announced enforcement discretion for legacy systems that were operational before Part 11 took effect on August 20, 1997, provided they were fit for purpose and maintained data integrity.

- Focus on Predicate Rules: Companies were encouraged to focus on fulfilling underlying regulatory requirements rather than treating Part 11 as an end in itself.

- Innovation Encouragement: The guidance explicitly stated that “innovation should not be stifled” by fear of Part 11, encouraging adoption of new technologies provided they maintained appropriate controls.

These principles (narrow scope, risk-based approach, pragmatic implementation, focus on underlying requirements, and innovation enablement) constitute the entire conceptual framework that CSA now claims as its contribution to validation thinking. The 2003 guidance didn’t just anticipate CSA; it embodied CSA principles in FDA policy well over a decade before the “Computer Software Assurance” marketing campaign began.

The EU Annex 11 Evolution: Proof That the System Was Already Working

The evolution of EU GMP Annex 11 provides another powerful example of how existing regulatory frameworks have continuously incorporated the principles that CSA now claims as innovations. The current Annex 11, dating from 2011, already included most elements that CSA advocates present as breakthrough thinking.

The 2011 Annex 11 established several key principles that remain relevant today:

- Risk-Based Validation: Clause 1 requires that “Risk management should be applied throughout the lifecycle of the computerised system taking into account patient safety, data integrity and product quality”—a clear articulation of risk-based thinking.

- Supplier Assessment: The regulation required assessment of suppliers and their quality systems, anticipating the “trusted supplier” concepts that CSA emphasizes.

- Lifecycle Management: Annex 11 required that systems be validated and maintained in a validated state throughout their operational life.

- Change Control: The regulation established requirements for managing changes to validated systems.

- Data Integrity: Electronic records requirements anticipated many of the data integrity concerns that now drive validation practices.

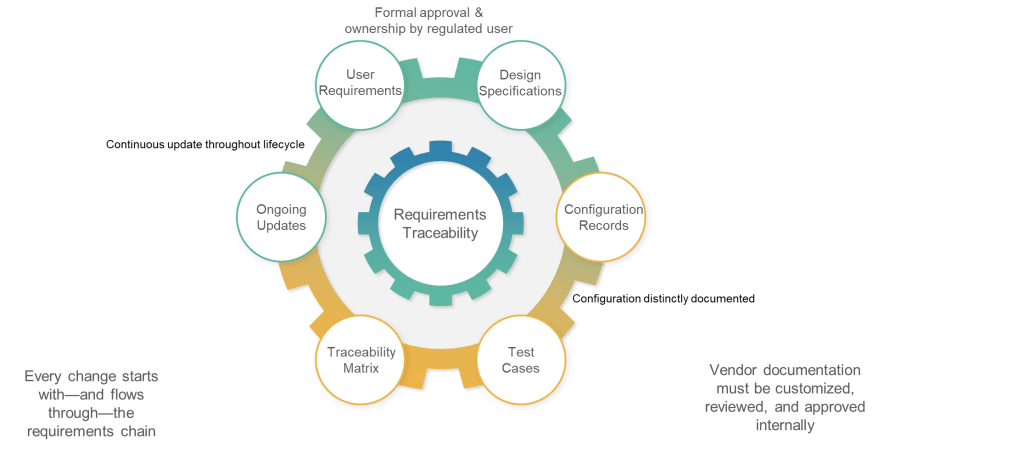

The 2025 draft revision of Annex 11 represents evolution, not revolution. While the document has expanded significantly, most additions address technological developments—cloud computing, artificial intelligence, cybersecurity—rather than fundamental changes in validation philosophy. The core principles remain unchanged: risk-based validation, lifecycle management, supplier oversight, and data integrity protection.

Importantly, the draft Annex 11 demonstrates regulatory convergence rather than divergence. The revision aligns more closely with FDA CSA guidance, GAMP 5 second edition, ICH Q9, and ISO 27001. This alignment doesn’t validate CSA as revolutionary—it demonstrates that global regulators recognize the maturity and effectiveness of existing validation approaches.

The FDA CSA Final Guidance: Official Release and the Repackaging of Established Principles

On September 24, 2025, the FDA officially published its final guidance on “Computer Software Assurance for Production and Quality System Software,” marking the culmination of a three-year journey from draft to final policy. This final guidance, while presented as a modernization breakthrough by consulting industry advocates, provides perhaps the clearest evidence yet that CSA represents sophisticated rebranding rather than genuine innovation.

The Official Position: Supplement, Not Revolution

The FDA’s own language reveals the evolutionary rather than revolutionary nature of CSA. The guidance explicitly states that it “supplements FDA’s guidance, ‘General Principles of Software Validation’” with one notable exception: “this guidance supersedes Section 6: Validation of Automated Process Equipment and Quality System Software of the Software Validation guidance”.

This measured approach directly contradicts the consulting industry narrative that positions CSA as a wholesale replacement for traditional validation approaches. The FDA is not abandoning established software validation principles—it is refining their application to production and quality system software while maintaining the fundamental framework that has served the industry effectively for over two decades.

What Actually Changed: Evolutionary Refinement

The final guidance incorporates several refinements that demonstrate the FDA’s commitment to practical implementation rather than theoretical innovation:

Risk-Based Framework Formalization: The guidance provides explicit criteria for determining “high process risk” versus “not high process risk” software functions, creating a binary classification system that simplifies risk assessment while maintaining proportionate validation effort. However, this risk-based thinking merely formalizes the spectrum approach that mature GAMP implementations have applied for years.

Cloud Computing Integration: The guidance addresses Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) deployments, providing clarity on when cloud-based systems require validation. This represents adaptation to technological evolution rather than philosophical innovation—the same risk-based principles apply regardless of deployment model.

Unscripted Testing Validation: The guidance explicitly endorses “unscripted testing” as an acceptable validation approach, encouraging “exploratory, ad hoc, and unscripted testing methods” when appropriate. This acknowledgment of testing methods that experienced practitioners have used for years represents regulatory catch-up rather than breakthrough thinking.

Digital Evidence Acceptance: The guidance states that “FDA recommends incorporating the use of digital records and digital signature capabilities rather than duplicating results already digitally retained,” providing regulatory endorsement for practices that reduce documentation burden. Again, this formalizes efficiency measures that sophisticated organizations have implemented within existing frameworks.
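The binary classification mentioned under Risk-Based Framework Formalization can be sketched in a few lines. This is a hedged illustration only: the `SoftwareFunction` type and its boolean field are hypothetical stand-ins for a reviewer's documented judgment, not the guidance's wording, and pairing each label with scripted or unscripted testing is my simplifying assumption about how effort might scale.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoftwareFunction:
    name: str
    # Hypothetical reviewer judgment: could a failure of this function
    # foreseeably compromise product quality or patient safety?
    failure_compromises_quality_or_safety: bool


def process_risk(function: SoftwareFunction) -> str:
    """Return one of the two labels the guidance uses."""
    if function.failure_compromises_quality_or_safety:
        return "high process risk"
    return "not high process risk"


def suggested_testing(function: SoftwareFunction) -> str:
    # Assumption for illustration: more rigorous scripted testing for
    # high process risk, unscripted testing otherwise.
    if process_risk(function) == "high process risk":
        return "scripted testing"
    return "unscripted testing"


functions = [
    SoftwareFunction("automated release decision", True),
    SoftwareFunction("dashboard colour theme", False),
]
for f in functions:
    print(f"{f.name}: {process_risk(f)} -> {suggested_testing(f)}")
```

Seen this way, the binary label is simply the risk spectrum collapsed to two bands, which is the point made above about formalizing what mature programs already do.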

The Definitional Games: CSA Versus CSV

The final guidance provides perhaps the most telling evidence of CSA’s repackaging nature through its definition of Computer Software Assurance: “a risk-based approach for establishing and maintaining confidence that software is fit for its intended use”. This definition could have been applied to effective computer system validation programs throughout the past two decades without modification.

The guidance emphasizes that CSA “follows a least-burdensome approach, where the burden of validation is no more than necessary to address the risk”. This principle was explicitly articulated in ICH Q9 (Quality Risk Management) published in 2005 and embedded in GAMP 5 guidance from 2008. The FDA is not introducing least-burdensome thinking—it is providing regulatory endorsement for principles that the industry has applied successfully for over fifteen years.

More significantly, the guidance acknowledges that CSA “establishes and maintains that the software used in production or the quality system is in a state of control throughout its life cycle (‘validated state’)”. The concept of maintaining validated state through lifecycle management represents core computer system validation thinking that predates CSA by decades.

Practical Examples: Repackaged Wisdom

The final guidance includes four detailed examples in Appendix A that demonstrate CSA application to real-world scenarios: Nonconformance Management Systems, Learning Management Systems, Business Intelligence Applications, and Software as a Service (SaaS) Product Life Cycle Management Systems. These examples provide valuable practical guidance, but they illustrate established validation principles rather than innovative approaches.

Consider the Nonconformance Management System example, which demonstrates risk assessment, supplier evaluation, configuration testing, and ongoing monitoring. Each element represents standard GAMP-based validation practice:

- Risk Assessment: Determining that failure could impact product quality aligns with established risk-based validation principles

- Supplier Evaluation: Assessing vendor development practices and quality systems follows GAMP supplier guidance

- Configuration Testing: Verifying that system configuration meets business requirements represents basic user acceptance testing

- Ongoing Monitoring: Maintaining validated state through change control and periodic review embodies lifecycle management concepts

The Business Intelligence Applications example similarly demonstrates established practices repackaged with CSA terminology. The guidance recommends focusing validation effort on “data integrity, accuracy of calculations, and proper access controls”—core concerns that experienced validation professionals have addressed routinely using GAMP principles.

The Regulatory Timing: Why Now?

The timing of the final CSA guidance publication reveals important context about regulatory motivation. The guidance development began in earnest in 2022, coinciding with increasing industry pressure to address digital transformation challenges, cloud computing adoption, and artificial intelligence integration in manufacturing environments.

However, the three-year development timeline suggests careful consideration rather than urgent need for wholesale validation reform. If existing validation approaches were fundamentally inadequate, we would expect more rapid regulatory response to address patient safety concerns. Instead, the measured development process indicates that the FDA recognized the adequacy of existing approaches while seeking to provide clearer guidance for emerging technologies.

The final guidance explicitly states that FDA “believes that applying a risk-based approach to computer software used as part of production or the quality system would better focus manufacturers’ quality assurance activities to help ensure product quality while helping to fulfill validation requirements”. This language acknowledges that existing approaches fulfill regulatory requirements—the guidance aims to optimize resource allocation rather than address compliance failures.

The Consulting Industry’s Role in Manufacturing Urgency

To understand why CSA has gained traction despite offering little genuine innovation, we must examine the economic incentives that drive consulting industry behavior. The computer system validation consulting market represents hundreds of millions of dollars annually, with individual validation projects ranging from tens of thousands to millions of dollars depending on system complexity and organizational scope.

This market faces a fundamental problem: mature practices don’t generate consulting revenue. If organizations understand that their current GAMP-based validation approaches are fundamentally sound and regulatory-compliant, they’re less likely to engage consultants for expensive “modernization” projects. CSA provides the solution to this problem by creating artificial urgency around practices that were already fit for purpose.

The CSA marketing campaign follows a predictable pattern that the consulting industry has used repeatedly across different domains:

Step 1: Problem Creation. Traditional CSV is portrayed as outdated, burdensome, and potentially non-compliant with evolving regulatory expectations. This creates anxiety among quality professionals who fear falling behind industry best practices.

Step 2: Solution Positioning. CSA is presented as the modern, efficient, risk-based alternative that leading organizations are already adopting. Early adopters are portrayed as innovative leaders, while traditional practitioners risk being perceived as laggards.

Step 3: Urgency Amplification. Regulatory changes (like the Annex 11 revision) are leveraged to suggest that traditional approaches may become non-compliant, requiring immediate action.

Step 4: Capability Marketing. Consulting firms position themselves as experts in the “new” CSA approach, offering training, assessment services, and implementation support for organizations seeking to “modernize” their validation practices.

This pattern is particularly insidious because it exploits legitimate professional concerns. Quality professionals genuinely want to ensure their practices remain current and effective. However, the CSA campaign preys on these concerns by suggesting that existing practices are inadequate when, in fact, they remain perfectly sufficient for regulatory compliance and business effectiveness.

The False Dichotomy: CSV Versus CSA

Perhaps the most misleading aspect of CSA promotion is the suggestion that organizations must choose between “traditional CSV” and “modern CSA” approaches. This creates a false dichotomy that obscures the reality: well-implemented GAMP-based validation programs already incorporate every principle that CSA advocates as innovative.

Consider the claimed distinctions between CSV and CSA:

- Critical Thinking Over Documentation: CSA proponents suggest that traditional CSV focuses on documentation production rather than system quality. However, GAMP 5 has emphasized risk-based thinking and proportionate documentation for over fifteen years. Organizations producing excessive documentation were implementing GAMP poorly, not following its actual guidance.

- Testing Over Paperwork: The claim that CSA prioritizes testing effectiveness over documentation completeness misrepresents both approaches. GAMP has always emphasized that validation should provide confidence in system performance, not just documentation compliance. The GAMP software categories explicitly scale testing requirements to risk levels.

- Automation and Modern Technologies: CSA advocates present automation and advanced testing methods as CSA innovations. However, Annex 11 Clause 4.7 has addressed automated testing tools since 2011, calling for documented assessments of their adequacy, and GAMP 5 second edition explicitly addresses agile development, cloud computing, and artificial intelligence.

- Risk-Based Resource Allocation: The suggestion that CSA introduces risk-based resource allocation ignores decades of GAMP implementation where validation effort is explicitly scaled to system risk and business impact.

- Supplier Leverage: CSA emphasis on leveraging supplier documentation and testing is presented as innovative thinking. However, GAMP has advocated supplier assessment and documentation leverage since its early versions, with detailed guidance on when and how to rely on supplier work.

The reality is that organizations with mature, well-implemented validation programs are already applying CSA principles without recognizing them as such. They conduct risk assessments, scale validation activities appropriately, leverage supplier documentation effectively, and focus resources on high-impact systems. They didn’t need CSA to tell them to think critically—they were already applying critical thinking to validation challenges.

The Spectrum Reality: Quality as a Continuous Variable

One of the most important concepts that both GAMP and effective validation practice have always recognized is that system quality exists on a spectrum, not as a binary state. Systems aren’t simply “validated” or “not validated”—they exist at various points along a continuum of validation rigor that corresponds to their risk profile and business impact.

This spectrum concept directly contradicts the CSA marketing message that suggests traditional validation approaches treat all systems identically. In reality, experienced validation professionals have always applied different approaches to different system types.

This spectrum approach enables organizations to allocate validation resources effectively while maintaining appropriate controls. A simple email archiving system doesn’t receive the same validation rigor as a batch manufacturing execution system—not because we’re cutting corners, but because the risks are fundamentally different.

CSA doesn’t introduce this spectrum concept—it restates principles that have been embedded in GAMP guidance for over a decade. The suggestion that traditional validation approaches lack risk-based thinking demonstrates either ignorance of GAMP principles or deliberate misrepresentation of current practices.

Regulatory Convergence: Proof of Existing Framework Maturity

The convergence of global regulatory approaches around risk-based validation principles provides compelling evidence that existing frameworks were already effective and didn’t require CSA “modernization.” The 2025 draft Annex 11 revision demonstrates this convergence clearly.

Key aspects of the draft revision align closely with established GAMP principles:

- Risk Management Integration: Section 6 requires risk management throughout the system lifecycle, aligning with ICH Q9 and existing GAMP guidance.

- Lifecycle Perspective: Section 4 emphasizes lifecycle management from planning through retirement, consistent with GAMP lifecycle models.

- Supplier Oversight: Section 7 requires supplier qualification and ongoing assessment, building on existing GAMP supplier guidance.

- Security Integration: Section 15 addresses cybersecurity as a GMP requirement, reflecting technological evolution rather than philosophical change.

- Periodic Review: Section 14 mandates periodic system review, formalizing practices that mature organizations already implement.

This alignment doesn’t validate CSA as revolutionary—it demonstrates that global regulators recognize the effectiveness of existing risk-based validation approaches and are codifying them more explicitly. The fact that CSA principles align with regulatory evolution proves that these principles were already embedded in effective validation practice.

The finalized FDA guidance fits into this convergence by providing educational clarity for validation professionals who have struggled to apply risk-based principles effectively. The detailed examples and explicit risk classification criteria offer practical guidance that can improve validation program implementation. This is not a call by the FDA for radical changes; it is an educational moment on the current consensus.

The Technical Reality: What Actually Drives System Quality

Beneath the consulting industry rhetoric about CSA lies a more fundamental question: what actually drives computer system quality in regulated environments? The answer has remained consistent across decades of validation practice and won’t change regardless of whether we call our approach CSV, CSA, or any other acronym.

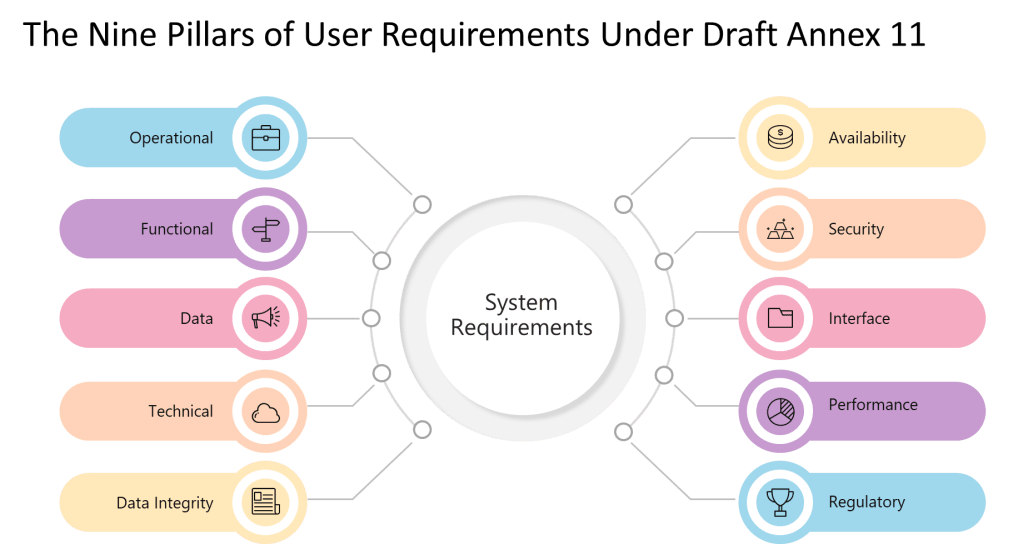

System quality derives from several key factors that transcend validation methodology:

- Requirements Definition: Systems must be designed to meet clearly defined user requirements that align with business processes and regulatory obligations. Poor requirements lead to poor systems regardless of validation approach.

- Supplier Competence: The quality of the underlying software depends fundamentally on the supplier’s development practices, quality systems, and technical expertise. Validation can detect defects but cannot create quality that wasn’t built into the system.

- Configuration Control: Proper configuration of commercial systems requires deep understanding of both the software capabilities and the business requirements. Poor configuration creates risks that no amount of validation testing can eliminate.

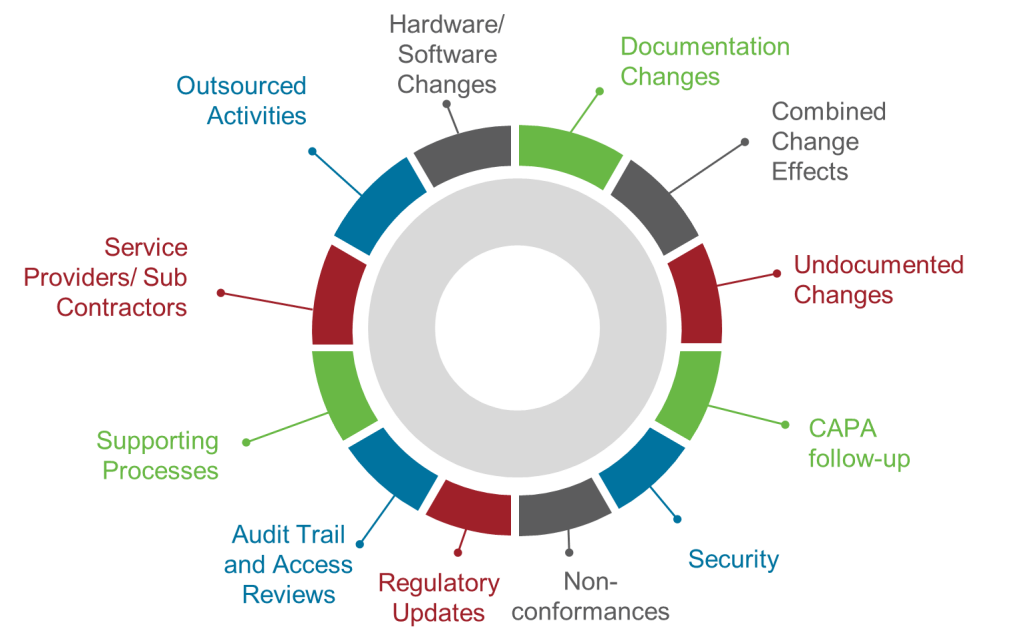

- Change Management: System quality degrades over time without effective change control processes that ensure modifications maintain validated status. This requires ongoing attention regardless of initial validation approach.

- User Competence: Even perfectly validated systems fail if users lack adequate training, motivation, or procedural guidance. Human factors often determine system effectiveness more than technical validation.

- Operational Environment: Systems must be maintained within their designed operational parameters—appropriate hardware, network infrastructure, security controls, and environmental conditions. Environmental failures can compromise even well-validated systems.

These factors have driven system quality throughout the history of computer system validation and will continue to do so regardless of methodological labels. CSA doesn’t address any of these fundamental quality drivers differently than GAMP-based approaches—it simply rebrands existing practices with contemporary terminology.

The Economics of Validation: Why Efficiency Matters

One area where CSA advocates make legitimate points involves the economics of validation practice. Poor validation implementations can indeed create excessive costs and time delays that provide minimal risk reduction benefit. However, these problems result from poor implementation, not inherent methodological limitations.

Effective validation programs have always balanced several economic considerations:

- Resource Allocation: Validation effort should be concentrated on systems with the highest risk and business impact. Organizations that validate all systems identically are misapplying GAMP principles, not following them.

- Documentation Efficiency: Validation documentation should support business objectives rather than existing for its own sake. Excessive documentation often results from misunderstanding regulatory requirements rather than regulatory over-reach.

- Testing Effectiveness: Validation testing should build confidence in system performance rather than simply following predetermined scripts. Effective testing combines scripted protocols with exploratory testing, automated validation, and ongoing monitoring.

- Lifecycle Economics: The total cost of validation includes initial validation plus ongoing maintenance throughout the system lifecycle. Front-end investment in robust validation often reduces long-term operational costs.

- Opportunity Cost: Resources invested in validation could be applied to other quality improvements. Effective validation programs consider these opportunity costs and optimize overall quality outcomes.

These economic principles aren’t CSA innovations—they’re basic project management applied to validation activities. Organizations experiencing validation inefficiencies typically suffer from poor implementation of established practices rather than inadequate methodological guidance.

The Agile Development Challenge: Old Wine in New Bottles

One area where CSA advocates claim particular expertise involves validating systems developed using agile methodologies, continuous integration/continuous deployment (CI/CD), and other modern software development approaches. This represents a more legitimate consulting opportunity because these development methods do create genuine challenges for traditional validation approaches.

However, the validation industry’s response to agile development demonstrates both the adaptability of existing frameworks and the consulting industry’s tendency to oversell new approaches as revolutionary breakthroughs.

GAMP 5 second edition, published in 2022, explicitly addresses agile development challenges and provides guidance for validating systems developed using modern methodologies. The core principles remain unchanged—validation should provide confidence that systems are fit for their intended use—but the implementation approaches adapt to different development lifecycles.

Key adaptations for agile development include:

- Iterative Validation: Rather than conducting validation at the end of development, validation activities occur throughout each development sprint, allowing for earlier defect detection and correction.

- Automated Testing Integration: Automated testing tools become part of the validation approach rather than separate activities, leveraging the automated testing that agile development teams already implement.

- Risk-Based Prioritization: User stories and system features are prioritized based on risk assessment, ensuring that high-risk functionality receives appropriate validation attention.

- Continuous Documentation: Documentation evolves continuously rather than being produced as discrete deliverables, aligning with agile documentation principles.

- Supplier Collaboration: Validation activities are integrated with supplier development processes rather than conducted independently, leveraging the transparency that agile methods provide.

These adaptations represent evolutionary, often slight, improvements in validation practice rather than revolutionary breakthroughs. They address genuine challenges created by modern development methods while maintaining the fundamental goal of ensuring system fitness for intended use.
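To make the risk-based prioritization of user stories concrete, here is a minimal Python sketch. The `UserStory` fields, the 1-to-3 rating scales, the multiplicative score, and the tier thresholds are all hypothetical choices for illustration. They are not taken from GAMP 5 or any regulatory guidance, and a real program would define its own scales in its risk management procedure.

```python
from dataclasses import dataclass


@dataclass
class UserStory:
    """A backlog item with illustrative ratings, each from 1 (low) to 3 (high)."""
    name: str
    severity: int        # impact on patient safety, product quality, or data integrity
    probability: int     # likelihood that the function fails
    detectability: int   # 1 = failure easily detected, 3 = failure likely to go unnoticed

    @property
    def risk_score(self) -> int:
        # Assumed scheme: simple product of the three ratings, ranging from 1 to 27.
        return self.severity * self.probability * self.detectability


def validation_tier(story: UserStory) -> str:
    """Map a risk score to a testing approach. The thresholds are assumptions."""
    if story.risk_score >= 12:
        return "scripted testing"
    if story.risk_score >= 4:
        return "unscripted testing with recorded results"
    return "rely on supplier and automated tests"


def prioritize(backlog: list[UserStory]) -> list[UserStory]:
    """Return the stories ordered from highest to lowest risk score."""
    return sorted(backlog, key=lambda s: s.risk_score, reverse=True)


if __name__ == "__main__":
    # Hypothetical sprint backlog, invented purely for illustration.
    backlog = [
        UserStory("Change report font", 1, 1, 1),
        UserStory("Electronic signature on batch release", 3, 2, 3),
        UserStory("Export audit trail to PDF", 2, 2, 2),
    ]
    for story in prioritize(backlog):
        print(f"{story.risk_score:>2}  {story.name}: {validation_tier(story)}")
```

Nothing in this sketch is specific to CSA or to agile. It is the same proportionate-effort logic that risk-based validation has always used, applied per sprint instead of per project.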

The Cloud Computing Reality: Infrastructure Versus Application

Another area where CSA advocates claim particular relevance involves cloud-based systems and Software as a Service (SaaS) applications. This represents a more legitimate area of methodological development because cloud computing does create genuine differences in validation approach compared to traditional on-premises systems.

However, the core validation challenges remain unchanged: organizations must ensure that cloud-based systems are fit for their intended use, maintain data integrity, and comply with applicable regulations. The differences lie in implementation details rather than fundamental principles.

Key considerations for cloud-based system validation include:

- Shared Responsibility Models: Cloud providers and customers share responsibility for different aspects of system security and compliance. Validation approaches must clearly delineate these responsibilities and ensure appropriate controls at each level.

- Supplier Assessment: Cloud providers require more extensive assessment than traditional software suppliers because they control critical infrastructure components that customers cannot directly inspect.

- Data Residency and Transfer: Cloud systems often involve data transfer across geographic boundaries and storage in multiple locations. Validation must address these data handling practices and their regulatory implications.

- Service Level Agreements: Cloud services operate under different availability and performance models than on-premises systems. Validation approaches must adapt to these service models.

- Continuous Updates: Cloud providers often update their services more frequently than traditional software suppliers. Change control processes must adapt to this continuous update model.

These considerations require adaptation of validation practices but don’t invalidate existing principles. Organizations can validate cloud-based systems using GAMP principles with appropriate modification for cloud-specific characteristics. CSA doesn’t provide fundamentally different guidance—it repackages existing adaptation strategies with cloud-specific terminology.
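The shared responsibility point lends itself to a simple illustration. The following Python sketch records who owns each control and flags the gaps a validation team would need to close. The `Control` structure, the owner labels, and the example controls are assumptions made for illustration, not a format prescribed by GAMP or by any cloud provider.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Control:
    """One entry in a hypothetical shared-responsibility matrix."""
    name: str
    owner: Optional[str]              # "provider", "customer", "shared", or None if unassigned
    supplier_evidence: Optional[str]  # e.g. a reference to an audit report, if any


def find_gaps(controls: list[Control]) -> list[str]:
    """List controls with no owner, or provider-side controls lacking supplier evidence."""
    issues = []
    for control in controls:
        if control.owner is None:
            issues.append(f"{control.name}: no owner assigned")
        elif control.owner in ("provider", "shared") and not control.supplier_evidence:
            issues.append(
                f"{control.name}: provider-side responsibility has no supplier evidence on file"
            )
    return issues


if __name__ == "__main__":
    # Invented example entries, for illustration only.
    matrix = [
        Control("Physical data center security", "provider", "third-party audit report"),
        Control("User access review", "customer", None),
        Control("Backup and restore", "shared", None),
        Control("Patch management", None, None),
    ]
    for issue in find_gaps(matrix):
        print(issue)
```

The check is deliberately trivial. The difficult part of cloud validation is agreeing on the matrix contents with the supplier, which is a supplier assessment activity that established practice already covers.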

The Data Integrity Connection: Where Real Innovation Occurs

One area where legitimate innovation has occurred in pharmaceutical quality involves data integrity practices and their integration with computer system validation. The FDA’s data integrity guidance documents, EU data integrity guidelines, and industry best practices have evolved significantly over the past decade, creating genuine opportunities for improved validation approaches.

However, this evolution represents refinement of existing principles rather than replacement of established practices. Data integrity concepts build directly on computer system validation foundations:

- ALCOA+ Principles: The requirement that data be Attributable, Legible, Contemporaneous, Original, and Accurate, plus Complete, Consistent, Enduring, and Available, extends traditional validation concepts to address specific data handling challenges.

- Audit Trail Requirements: Enhanced audit trail capabilities build on existing Part 11 requirements while addressing modern data manipulation risks.

- System Access Controls: Improved user authentication and authorization extend traditional computer system security while addressing contemporary threats.

- Data Lifecycle Management: Systematic approaches to data creation, processing, review, retention, and destruction integrate with existing system lifecycle management.

- Risk-Based Data Review: Proportionate data review approaches apply risk-based thinking to data integrity challenges.

These developments represent genuine improvements in validation practice that address real regulatory and business challenges. They demonstrate how existing frameworks can evolve to address new challenges without requiring wholesale replacement of established approaches.

The Training and Competence Reality: Where Change Actually Matters

Perhaps the area where CSA advocates make the most legitimate points involves training and competence development for validation professionals. Traditional validation training has often focused on procedural compliance rather than risk-based thinking, creating practitioners who can follow protocols but struggle with complex risk assessment and decision-making.

This competence gap creates real problems in validation practice:

- Protocol-Following Over Problem-Solving: Validation professionals trained primarily in procedural compliance may miss system risks that don’t fit predetermined testing categories.

- Documentation Focus Over Quality Focus: Emphasis on documentation completeness can obscure the underlying goal of ensuring system fitness for intended use.

- Risk Assessment Limitations: Many validation professionals lack the technical depth needed for effective risk assessment of complex modern systems.

- Regulatory Interpretation Challenges: Understanding the intent behind regulatory requirements rather than just their literal text requires experience and training that many practitioners lack.

- Technology Evolution: Rapid changes in information technology create knowledge gaps for validation professionals trained primarily on traditional systems.

These competence challenges represent genuine opportunities for improvement in validation practice. However, they result from inadequate implementation of existing approaches rather than flaws in the approaches themselves. GAMP has always emphasized risk-based thinking and proportionate validation—the problem lies in how practitioners are trained and supported, not in the methodological framework.

Effective responses to these competence challenges include:

- Risk-Based Training: Education programs that emphasize risk assessment and critical thinking rather than procedural compliance.

- Technical Depth Development: Training that builds understanding of information technology principles rather than just validation procedures.

- Regulatory Context Education: Programs that help practitioners understand the regulatory intent behind validation requirements.

- Scenario-Based Learning: Training that uses complex, real-world scenarios rather than simplified examples.

- Continuous Learning Programs: Ongoing education that addresses technology evolution and regulatory changes.

These improvements can be implemented within existing GAMP frameworks without requiring adoption of any ‘new’ paradigm. They address real professional development needs while building on established validation principles.

The Measurement Challenge: How Do We Know What Works?

One of the most frustrating aspects of the CSA versus CSV debate is the lack of empirical evidence supporting claims of CSA superiority. Validation effectiveness ultimately depends on measurable outcomes: system reliability, regulatory compliance, cost efficiency, and business enablement. However, CSA advocates rarely present comparative data demonstrating improved outcomes.

Meaningful validation metrics might include:

- System Reliability: Frequency of system failures, time to resolution, and impact on business operations provide direct measures of validation effectiveness.

- Regulatory Compliance: Inspection findings, regulatory citations, and compliance costs indicate how well validation approaches meet regulatory expectations.

- Cost Efficiency: Total cost of ownership including initial validation, ongoing maintenance, and change control activities reflects economic effectiveness.

- Time to Implementation: Speed of system deployment while maintaining appropriate quality controls indicates process efficiency.

- User Satisfaction: System usability, training effectiveness, and user adoption rates reflect practical validation outcomes.

- Change Management Effectiveness: Success rate of system changes, time required for change implementation, and change-related defects indicate validation program maturity.

Without comparative data on these metrics, claims of CSA superiority remain unsupported marketing assertions. Organizations considering CSA adoption should demand empirical evidence of improved outcomes rather than accepting theoretical arguments about methodological superiority.
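For organizations that want to produce such comparative data themselves, a minimal Python sketch of the calculation might look like the following. The `ChangeRecord` fields and the approach labels are hypothetical. The sketch covers only two of the metrics listed above (time to implement a change and change-related defect rate), and it contains no real results.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ChangeRecord:
    """One completed system change. All fields are illustrative assumptions."""
    approach: str             # label of the validation approach used, e.g. "CSV" or "CSA"
    days_to_implement: float  # elapsed days from change request to release
    caused_defect: bool       # True if a defect was later traced to this change


def summarize(records: list[ChangeRecord]) -> dict[str, dict[str, float]]:
    """Group change records by approach and compute simple per-approach metrics."""
    grouped: dict[str, list[ChangeRecord]] = {}
    for record in records:
        grouped.setdefault(record.approach, []).append(record)

    summary: dict[str, dict[str, float]] = {}
    for approach, items in grouped.items():
        summary[approach] = {
            "changes": len(items),
            "mean_days_to_implement": mean(r.days_to_implement for r in items),
            "defect_rate": sum(r.caused_defect for r in items) / len(items),
        }
    return summary


if __name__ == "__main__":
    # Placeholder records to show the call shape; these are not measurements.
    records = [
        ChangeRecord("CSV", 10.0, False),
        ChangeRecord("CSV", 20.0, True),
        ChangeRecord("CSA", 5.0, False),
    ]
    for approach, metrics in summarize(records).items():
        print(approach, metrics)
```

A fair comparison would also need systems of comparable risk and complexity and enough changes per group to be meaningful. Averages over a handful of records prove nothing in either direction.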

The Global Regulatory Perspective: Why Consistency Matters

The pharmaceutical industry operates in a global regulatory environment where consistency across jurisdictions provides significant business value. Validation approaches that work effectively across multiple regulatory frameworks reduce compliance costs and enable efficient global operations.

GAMP-based validation approaches have demonstrated this global effectiveness through widespread adoption across major pharmaceutical markets:

- FDA Acceptance: GAMP principles align with FDA computer system validation expectations and have been successfully applied in thousands of FDA-regulated facilities.

- EMA/European Union Compatibility: GAMP approaches satisfy EU GMP requirements including Annex 11 and have been widely implemented across European pharmaceutical operations.

- Other Regulatory Bodies: GAMP principles are compatible with Health Canada, TGA (Australia), PMDA (Japan), and other regulatory frameworks, enabling consistent global implementation.

- Industry Standards Integration: GAMP integrates effectively with ISO standards, ICH guidelines, and other international frameworks that pharmaceutical companies must address.

This global consistency represents a significant competitive advantage for established validation approaches. CSA, despite alignment with FDA thinking, has not demonstrated equivalent acceptance across other regulatory frameworks. Organizations adopting CSA risk creating validation approaches that work well in FDA-regulated environments but require modification for other jurisdictions.

The regulatory convergence demonstrated by the draft Annex 11 revision suggests that global harmonization is occurring around established risk-based validation principles rather than newer CSA concepts. This convergence validates existing approaches rather than supporting wholesale methodological change.

The Practical Implementation Reality: What Actually Happens

Beyond the methodological debates and consulting industry marketing lies the practical reality of how validation programs actually function in pharmaceutical organizations. This reality demonstrates why existing GAMP-based approaches remain effective and why CSA adoption often creates more problems than it solves.

Successful validation programs, regardless of methodological label, share several common characteristics:

- Senior Leadership Support: Validation programs succeed when senior management understands their business value and provides appropriate resources.

- Cross-Functional Integration: Effective validation requires collaboration between quality assurance, information technology, operations, and regulatory affairs functions.

- Appropriate Resource Allocation: Validation programs must be staffed with competent professionals and provided with adequate tools and budget.

- Clear Procedural Guidance: Staff need clear, practical procedures that explain how to apply validation principles to specific situations.

- Ongoing Training and Development: Validation effectiveness depends on continuous learning and competence development.

- Metrics and Continuous Improvement: Programs must measure their effectiveness and adapt based on performance data.

These success factors operate independently of methodological labels.

The practical implementation reality also reveals why consulting industry solutions often fail to deliver promised benefits. Consultants typically focus on methodological frameworks and documentation rather than the organizational factors that actually drive validation effectiveness. An organization with poor cross-functional collaboration, inadequate resources, and weak senior management support won’t solve these problems by adopting some consultant’s version of CSA. It needs fundamental improvements in how it approaches validation as a business function.

The Future of Validation: Evolution, Not Revolution

Looking ahead, computer system validation will continue to evolve in response to technological change, regulatory development, and business needs. However, this evolution will likely occur within existing frameworks rather than through wholesale replacement of established approaches.

Several trends will shape validation practice over the coming decade:

- Increased Automation: Automated testing tools, artificial intelligence applications, and machine learning capabilities will become more prevalent in validation practice, but they will augment rather than replace human judgment.

- Cloud and SaaS Integration: Cloud computing and Software as a Service applications will require continued adaptation of validation approaches, but these adaptations will build on existing risk-based principles.

- Data Analytics Integration: Advanced analytics capabilities will provide new insights into system performance and risk patterns, enabling more sophisticated validation approaches.

- Regulatory Harmonization: Continued convergence of global regulatory approaches will simplify validation for multinational organizations.

- Agile and DevOps Integration: Modern software development methodologies will require continued adaptation of validation practices, but the fundamental goals remain unchanged.

These trends represent evolutionary development rather than revolutionary change. They will require validation professionals to develop new technical competencies and adapt established practices to new contexts, but they don’t invalidate the fundamental principles that have guided effective validation for decades.

Organizations preparing for these future challenges will be best served by building strong foundational capabilities in risk assessment, technical understanding, and adaptability rather than adopting particular methodological labels. The ability to apply established validation principles to new challenges will prove more valuable than expertise in any specific framework or approach.

The Emperor’s New Validation Clothes

Computer System Assurance represents a textbook case of how the pharmaceutical consulting industry creates artificial innovation by rebranding established practices as revolutionary breakthroughs. Every principle that CSA advocates present as innovative thinking has been embedded in risk-based validation approaches, GAMP guidance, and regulatory expectations for over two decades.

The fundamental question is not whether CSA principles are sound—they generally are, because they restate established best practices. The question is whether the pharmaceutical industry benefits from treating existing practices as obsolete and investing resources in “modernization” projects that deliver minimal incremental value.

The answer should be clear to any quality professional who has implemented effective validation programs: we don’t need CSA to tell us to think critically about validation challenges, apply risk-based approaches to system assessment, or leverage supplier documentation effectively. We’ve been doing these things successfully for years using GAMP principles and established regulatory guidance.

What we do need is better implementation of existing approaches—more competent practitioners, stronger organizational support, clearer procedural guidance, and continuous improvement based on measurable outcomes. These improvements can be achieved within established frameworks without expensive consulting engagements or wholesale methodological change.

The computer system assurance emperor has no clothes—underneath the contemporary terminology and marketing sophistication lies the same risk-based, lifecycle-oriented, supplier-leveraging validation approach that mature organizations have been implementing successfully for over a decade. Quality professionals should focus their attention on implementation excellence rather than methodological fashion, building validation programs that deliver demonstrable business value regardless of what acronym appears on the procedure titles.

The choice facing pharmaceutical organizations is not between outdated CSV and modern CSA—it’s between poor implementation of established practices and excellent implementation of the same practices. Excellence is what protects patients, ensures product quality, and satisfies regulatory expectations. Everything else is just consulting industry marketing.