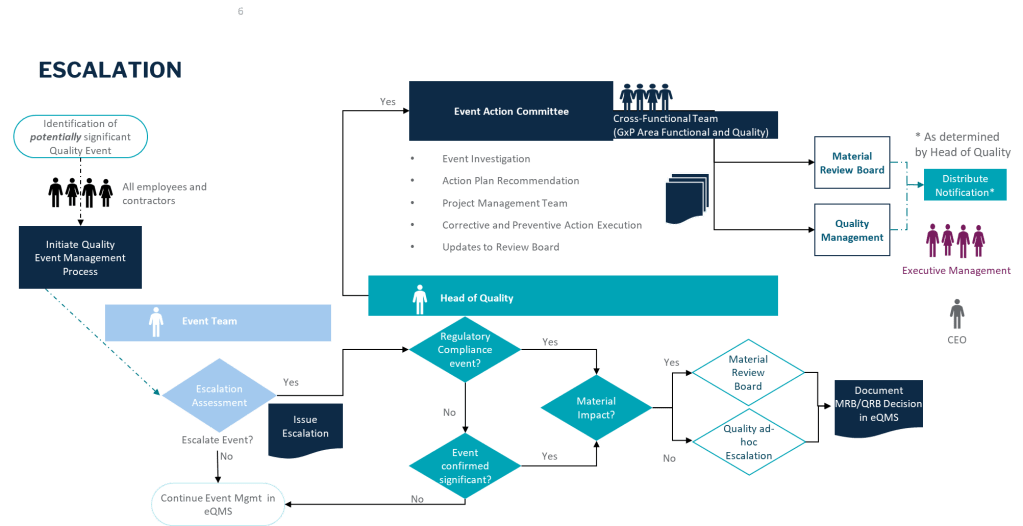

Quality escalation is a critical process for maintaining product integrity, particularly in industries governed by Good Practice (GxP) regulations, such as pharmaceuticals and biotechnology. Effective escalation ensures that issues are addressed promptly, reducing risks to product quality and patient safety. This post explores best practices for quality escalation, focusing on GxP compliance and the implications for regulatory notifications.

Understanding Quality Escalation

Quality escalation involves raising unresolved issues to higher management levels for timely resolution. This process is essential in environments where compliance with GxP regulations is mandatory. The primary goal is to ensure that products are manufactured, tested, and distributed in a manner that maintains their quality and safety.

This expectation applies across the GxP disciplines, including clinical research. ICH E6(R3) emphasizes effective monitoring and oversight to ensure that clinical trials are conducted in compliance with Good Clinical Practice (GCP) and applicable regulatory requirements, which includes identifying and addressing issues promptly.

Key Triggers for Escalation

Identifying triggers for escalation is crucial. Common triggers include:

- Regulatory Compliance Issues: Non-compliance with regulatory requirements can lead to product quality issues and necessitate escalation.

- Quality Control Failures: Failures in quality control processes, such as testing or inspection, can impact product safety and quality.

- Data Integrity Issues: Significant concerns about, or failures in, the integrity and quality of GxP data.

- Supply Chain Disruptions: Disruptions in the supply chain can affect the availability of critical components or materials, potentially impacting product quality.

- Patient Safety Concerns: Any issues related to patient safety, such as adverse events or potential safety risks, should be escalated immediately.

| Escalation Criteria | Examples of Quality Events for Escalation |
|---|---|
| Potential to adversely affect the quality, safety, efficacy, performance, or compliance of a product (commercial or clinical) | • Contamination (product, raw material, equipment, microbiological, environmental) • Product defect or deviation from process parameters or specifications (as filed with agencies, e.g., CQAs and CPPs) • Significant GMP deviations • Incorrect or deficient labeling • Product complaints (significant complaints, complaint trends) • OOS/OOT (out-of-specification/out-of-trend) results (e.g., stability) |
| Product counterfeiting, tampering, theft | • Product counterfeiting, tampering, or theft reportable to the Health Authority (HA) • Lost or stolen investigational medicinal product (IMP) • Fraud or misconduct associated with counterfeiting, tampering, or theft • Potential to impact product supply (e.g., removal, correction, recall) |
| Product shortage likely to disrupt patient care and/or reportable to HA | • Disruption of product supply due to product quality events, natural disasters (business continuity disruption), OOS impact, or capacity constraints |
| Potential to cause patient harm associated with a product quality event | • Urgent Safety Measures, Serious Breaches, significant product complaints, or safety signals determined to be associated with a product quality event |
| Significant GMP non-compliance/event | • Non-compliance or non-conformance event with the potential to affect product performance against specifications, safety, efficacy, or regulatory requirements |
| Regulatory Compliance Event | • Significant (critical or repeat) regulatory inspection findings; failure to adhere to regulatory commitments • Notification of a directed or for-cause inspection • Health Authority correspondence indicating potential regulatory action |
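The table above works as a screening rule: an event is escalated if it meets at least one criterion. The minimal Python sketch below makes that rule explicit, assuming each criterion has already been assessed as a yes/no flag by quality staff during triage (judging, for example, "potential to cause patient harm" still requires human assessment). The `QualityEvent` class, its flag names, and the functions `matched_criteria` and `requires_escalation` are hypothetical illustrations, not part of any particular quality management system; the criterion labels reuse the table's wording.

```python
from __future__ import annotations

from dataclasses import dataclass

# Escalation criteria, in table order. Keys are hypothetical flag names;
# values reuse the criterion wording from the escalation table.
CRITERIA = {
    "affects_product_quality": "Potential to adversely affect quality, safety, efficacy, performance, or compliance of product",
    "counterfeit_tamper_theft": "Product counterfeiting, tampering, theft",
    "shortage_risk": "Product shortage likely to disrupt patient care and/or reportable to HA",
    "patient_harm_risk": "Potential to cause patient harm associated with a product quality event",
    "significant_gmp_event": "Significant GMP non-compliance/event",
    "regulatory_compliance_event": "Regulatory Compliance Event",
}


@dataclass
class QualityEvent:
    """Hypothetical record of a quality event after initial triage.

    Each boolean flag records a human assessment of one escalation criterion.
    """
    event_id: str
    description: str
    affects_product_quality: bool = False
    counterfeit_tamper_theft: bool = False
    shortage_risk: bool = False
    patient_harm_risk: bool = False
    significant_gmp_event: bool = False
    regulatory_compliance_event: bool = False


def matched_criteria(event: QualityEvent) -> list[str]:
    """Return the criteria this event meets, in table order."""
    return [label for flag, label in CRITERIA.items() if getattr(event, flag)]


def requires_escalation(event: QualityEvent) -> bool:
    """Escalate if the event meets at least one criterion."""
    return bool(matched_criteria(event))


if __name__ == "__main__":
    oos = QualityEvent("QE-001", "Stability OOS result", affects_product_quality=True)
    print(requires_escalation(oos))  # True
    print(matched_criteria(oos))
```

Recording the matched criteria, rather than only a yes/no decision, keeps the reason for each escalation visible, which supports the documentation and trend tracking discussed below.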

Best Practices for Quality Escalation

- Proactive Identification: Encourage a culture where team members proactively identify potential issues. Early detection can prevent minor problems from escalating into major crises.

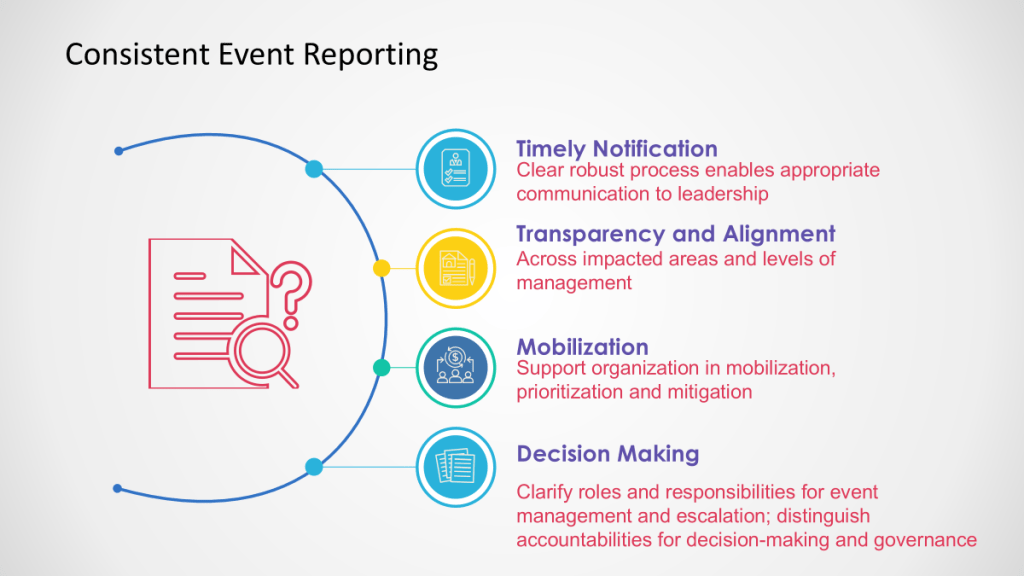

- Clear Communication Channels: Establish clear communication channels and protocols for escalating issues. This ensures that the right people are informed promptly and can take appropriate action.

- Documentation and Tracking: Use a central repository to document and track issues. This helps identify trends, implement corrective actions, and demonstrate compliance with regulatory requirements.

- Collaborative Resolution: Foster collaboration between different departments and stakeholders to resolve issues efficiently. This includes involving quality assurance, quality control, and regulatory affairs teams as necessary.

- Regulatory Awareness: Be aware of regulatory requirements and ensure that all escalations are handled in a manner that complies with these regulations. This includes maintaining confidentiality when necessary and ensuring transparency with regulatory bodies.

GxP Impact and Regulatory Notifications

In industries governed by GxP, any significant quality issues may require notification to regulatory bodies. This includes situations where product quality or patient safety is compromised. Best practices for handling such scenarios include:

- Prompt Notification: Notify regulatory bodies promptly if there is a risk to public health or if regulatory requirements are not met.

- Comprehensive Reporting: Ensure that all reports to regulatory bodies are comprehensive, including details of the issue, actions taken, and corrective measures implemented.

- Continuous Improvement: Use escalations as opportunities to improve processes and prevent future occurrences. This includes conducting root cause analyses and implementing preventive actions.

Fit with Quality Management Review

Quality escalation fits within the Quality Management Review band as an ad hoc, event-triggered review of significant issues. It ensures appropriate leadership attention and allows key decisions to be made in a timely manner.

Conclusion

Quality escalation is a vital component of maintaining product quality and ensuring patient safety in GxP environments. By implementing best practices such as proactive issue identification, clear communication, and collaborative resolution, organizations can effectively manage risks and comply with regulatory requirements. Understanding when and how to escalate issues is crucial for preventing potential crises and ensuring that products meet the highest standards of quality and safety.