A rubric is a tool used primarily in educational settings to evaluate student performance. It provides a clear set of criteria and standards that describe varying levels of quality for a specific assignment or task. Rubrics are designed to ensure consistency and objectivity in grading and feedback, which makes them a valuable resource for both teachers and students.

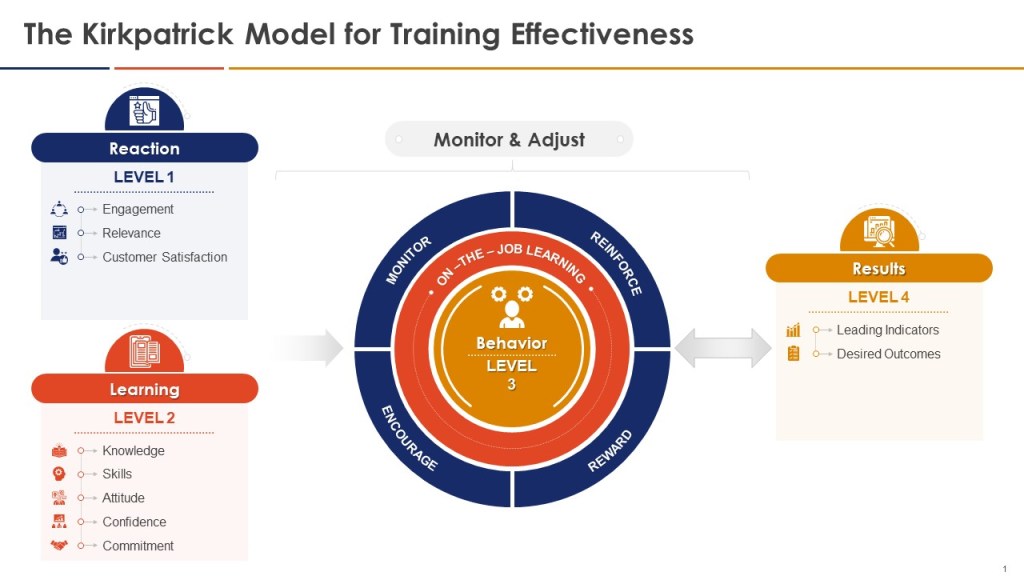

Rubrics are also useful for assessing competencies and skills within organizations. They provide a structured way to evaluate strengths and weaknesses, which makes them well suited to knowledge-based activities where you need to gauge whether training and execution are adequate. They can also help demonstrate that an outcome meets the agreed standard of "good".

Key Features of a Rubric

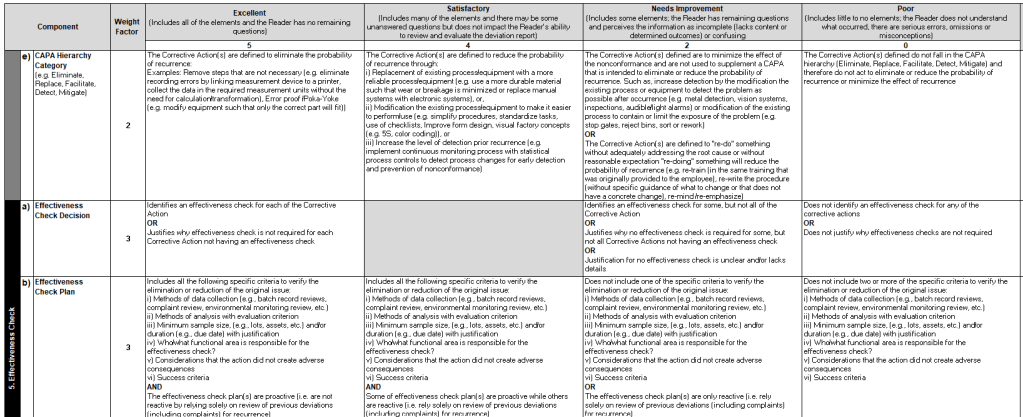

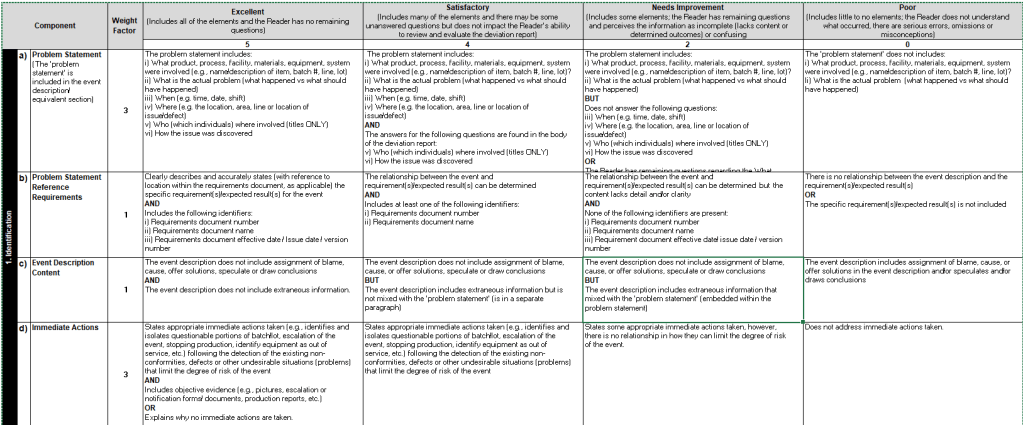

- Criteria: Rubrics list the specific criteria that matter for the assignment. These criteria spell out what is expected of the work, such as clarity, organization, and mechanics in a writing assignment.

- Performance Levels: Rubrics define different levels of achievement for each criterion, often using descriptive language (e.g., excellent, good, needs improvement) or numerical scores (e.g., 4, 3, 2, 1).

- Feedback and Guidance: Rubrics support detailed feedback, helping individuals understand their strengths and areas for improvement. This feedback can guide the people doing the work as they revise it to meet learning objectives more effectively.

Types of Rubrics

- Analytic Rubrics: These break the assignment down into several components, each with its own set of criteria and performance levels. This type provides detailed feedback on specific areas of the work.

- Holistic Rubrics: These assess the work as a whole rather than individual components. They provide a single overall score based on the general quality of the work.

- Single-Point Rubrics: These describe a single level of performance (the expected standard) for each criterion, leaving room to note where the work exceeds expectations and where it needs improvement.

Benefits of Using Rubrics

- Clarity and Consistency: Rubrics clarify expectations for students, ensuring they understand what good work looks like. They also promote consistency across evaluators and activities.

- Self-Assessment: Rubrics encourage individuals to reflect on their own work and understand the standards they need to meet. This can lead to improved learning outcomes as individuals become more aware of their progress and areas needing improvement.

I love rubrics. They are a great fit for quality systems: they can be used for on-the-job training, for record writing and review, and for re-qualifications. By creating rubrics, you define what good looks like, providing a structured and objective framework that improves clarity, consistency, and specificity in evaluations. Rubrics hold both the writer and the reviewer accountable.