We are at a fascinating inflection point in quality management, caught between traditional document-centric approaches and the emerging imperative for data-centricity needed to fully realize the potential of digital transformation. For several decades we have been moving through a technology transition that continues to accelerate and promises dramatic improvements in operations and quality. This transformation is driven by three interconnected trends: Pharma 4.0, the rise of AI, and the shift from documents to data.

The History and Evolution of Documents in Quality Management

The history of document management can be traced back to the introduction of the file cabinet in the late 1800s, providing a structured way to organize paper records. Quality management systems have even deeper roots, extending back to medieval Europe, when craftsmen's guilds developed strict guidelines for product inspection. These early approaches established the document as the fundamental unit of quality management, a paradigm that persisted through industrialization and into the modern era.

The document landscape took a dramatic turn in the 1980s with the increasing availability of computer technology. The development of servers allowed organizations to store documents electronically in centralized mainframes, marking the beginning of electronic document management systems (eDMS). Meanwhile, scanners enabled conversion of paper documents to digital format, and the rise of personal computers gave businesses the ability to create and store documents directly in digital form.

In traditional quality systems, documents serve as the backbone of quality operations and fall into three primary categories: functional documents (providing instructions), records (providing evidence), and reports (providing specific information). This document trinity has established our fundamental conception of what a quality system is and how it operates—a conception deeply influenced by the physical limitations of paper.

Breaking the Paper Paradigm: Limitations of Document-Centric Thinking

The Paper-on-Glass Dilemma

The maturation path for quality systems typically runs from paper execution, through paper-on-glass, to end-to-end integration and execution. However, most life sciences organizations remain stuck in the paper-on-glass phase of their digital evolution: they capture data in digital records that closely resemble the structure and layout of the paper-based workflow they replaced. The wider industry is still reluctant to move away from paper-like records, both because the process is familiar and because of uncertainty about how regulators will scrutinize the alternatives.

Paper-on-glass systems present several specific limitations that hamper digital transformation:

- Constrained design flexibility: Data capture is limited by the digital record’s design, which often mimics previous paper formats rather than leveraging digital capabilities. A pharmaceutical batch record system that meticulously replicates its paper predecessor inherently limits the system’s ability to analyze data across batches or integrate with other quality processes.

- Manual data extraction requirements: When data is trapped in digital documents structured like paper forms, it remains difficult to extract. This means data from paper-on-glass records typically requires manual intervention, substantially reducing data utilization effectiveness.

- Elevated error rates: Many paper-on-glass implementations lack sufficient logic and controls to prevent avoidable data capture errors that would be eliminated in truly digital systems. Without data validation rules built into the capture process, quality systems continue to allow errors that must be caught through manual review.

- Unnecessary artifacts: These approaches generate records with inflated sizes and unnecessary elements, such as cover pages that serve no functional purpose in a digital environment but persist because they were needed in paper systems.

- Cumbersome validation: Content must be fully controlled and managed manually, with none of the advantages gained from data-centric validation approaches.
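The point about error rates is easier to see with a small example. The sketch below shows what it can mean to build a validation rule into the capture step itself, so that an implausible entry is rejected at the moment it is typed rather than found later in manual review. The `FieldSpec` class, the `validate_entry` function, and the temperature limits are hypothetical names and values chosen for illustration, not part of any particular system.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical definition of one captured parameter and its limits."""
    name: str
    unit: str
    low: float
    high: float


def validate_entry(spec: FieldSpec, raw: str) -> Tuple[Optional[float], List[str]]:
    """Return (parsed value or None, list of problems) for an operator entry."""
    try:
        value = float(raw)
    except ValueError:
        return None, [f"{spec.name}: '{raw}' is not a number"]
    problems = []
    if not spec.low <= value <= spec.high:
        problems.append(
            f"{spec.name}: {value} {spec.unit} is outside "
            f"{spec.low} to {spec.high} {spec.unit}"
        )
    return value, problems


# Illustrative limits only, not taken from any real batch record.
mixing_temp = FieldSpec(name="mixing_temperature", unit="degC", low=20.0, high=25.0)

ok_value, ok_problems = validate_entry(mixing_temp, "22.5")        # accepted
bad_value, bad_problems = validate_entry(mixing_temp, "225")       # out of range
typo_value, typo_problems = validate_entry(mixing_temp, "22,5")    # not a number
```

A paper-on-glass form would accept all three entries as text and leave the last two for a reviewer to catch.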

Broader Digital Transformation Struggles

Pharmaceutical and medical device companies must navigate complex regulatory requirements while implementing new digital systems, which often causes initiatives to stall. Regulatory agencies have historically relied on document-based submissions and evidence, reinforcing document-centric mindsets even as technology evolves.

Beyond Paper-on-Glass: What Comes Next?

What comes after paper-on-glass? The natural evolution leads to end-to-end integration and execution systems that transcend document limitations and focus on data as the primary asset. This evolution isn’t merely about eliminating paper—it’s about reconceptualizing how we think about the information that drives quality management.

In fully integrated execution systems, functional documents and records become unified. Instead of having separate systems for managing SOPs and for capturing execution data, these systems bring process definitions and execution together. This approach drives up reliability and drives out error, but requires fundamentally different thinking about how we structure information.

A prime example of moving beyond paper-on-glass can be seen in advanced Manufacturing Execution Systems (MES) for pharmaceutical production. Rather than simply digitizing batch records, modern MES platforms incorporate AI, IIoT, and Pharma 4.0 principles to provide the right data, at the right time, to the right team. These systems deliver meaningful and actionable information, moving from merely connecting devices to optimizing manufacturing and quality processes.

AI-Powered Documentation: Breaking Through with Intelligent Systems

A dramatic example of breaking free from document constraints comes from Novo Nordisk’s use of AI to draft clinical study reports. The company has taken a leap forward in pharmaceutical documentation, putting AI to work where human writers once toiled for weeks. The Danish pharmaceutical company is using Claude, an AI model by Anthropic, to draft clinical study reports—documents that can stretch hundreds of pages.

This represents a fundamental shift in how we think about documents. Rather than having humans arrange data into documents manually, we can now use AI to generate high-quality documents directly from structured data sources. The document becomes an output—a view of the underlying data—rather than the primary artifact of the quality system.

Data Requirements: The Foundation of Modern Quality Systems in Life Sciences

Shifting from document-centric to data-centric thinking requires understanding that documents are merely vessels for data—and it’s the data that delivers value. When we focus on data requirements instead of document types, we unlock new possibilities for quality management in regulated environments.

At its core, any quality process is a way to realize a set of requirements. These requirements come from external sources (regulations, standards) and internal needs (efficiency, business objectives). Meeting these requirements involves integrating people, procedures, principles, and technology. By focusing on the underlying data requirements rather than the documents that traditionally housed them, life sciences organizations can create more flexible, responsive quality systems.

ICH Q9(R1) emphasizes that knowledge is fundamental to effective risk management, stating that “QRM is part of building knowledge and understanding risk scenarios, so that appropriate risk control can be decided upon for use during the commercial manufacturing phase.” We need to recognize the inverse relationship between knowledge and uncertainty in risk assessment. As ICH Q9(R1) notes, uncertainty may be reduced “via effective knowledge management, which enables accumulated and new information (both internal and external) to be used to support risk-based decisions throughout the product lifecycle.”

This approach helps us ensure that our tools take into account that our processes are living and breathing. It is all about moving to a process repository and away from a document mindset.

Documents as Data Views: Transforming Quality System Architecture

When we shift our paradigm to view documents as outputs of data rather than primary artifacts, we fundamentally transform how quality systems operate. This perspective enables a more dynamic, interconnected approach to quality management that transcends the limitations of traditional document-centric systems.

Breaking the Document-Data Paradigm

Traditionally, life sciences organizations have thought of documents as containers that hold data. This subtle but profound perspective has shaped how we design quality systems, leading to siloed applications and fragmented information. When we invert this relationship—seeing data as the foundation and documents as configurable views of that data—we unlock powerful capabilities that better serve the needs of modern life sciences organizations.

The Benefits of Data-First, Document-Second Architecture

When documents become outputs—dynamic views of underlying data—rather than the primary focus of quality systems, several transformative benefits emerge.

First, data becomes reusable across multiple contexts. The same underlying data can generate different documents for different audiences or purposes without duplication or inconsistency. For example, clinical trial data might generate regulatory submission documents, internal analysis reports, and patient communications—all from a single source of truth.

Second, changes to data automatically propagate to all relevant documents. In a document-first system, updating information requires manually changing each affected document, creating opportunities for errors and inconsistencies. In a data-first system, updating the central data repository automatically refreshes all document views, ensuring consistency across the quality ecosystem.
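A minimal sketch of these first two benefits, assuming a single specification record that feeds two different document views. The `SpecLimit` class, the two render functions, and the assay limits are hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class SpecLimit:
    """Hypothetical single-source record for one product specification."""
    product: str
    attribute: str
    low: float
    high: float
    unit: str


def render_sop_line(spec: SpecLimit) -> str:
    """View 1: an instruction line as it might appear in a procedure."""
    return (f"Verify that {spec.attribute} of {spec.product} is between "
            f"{spec.low} and {spec.high} {spec.unit}.")


def render_summary_row(spec: SpecLimit) -> str:
    """View 2: a compact row for a specification summary report."""
    return f"{spec.product} | {spec.attribute} | {spec.low}-{spec.high} {spec.unit}"


# Illustrative values only.
spec = SpecLimit("Product A", "assay", 95.0, 105.0, "% label claim")
before = (render_sop_line(spec), render_summary_row(spec))

# One change to the data; both views pick it up the next time they are rendered.
spec.high = 102.0
after = (render_sop_line(spec), render_summary_row(spec))
```

In a document-first system the same change would mean finding and editing every document that quotes the limit.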

Third, this approach enables more sophisticated analytics and insights. When data exists independently of documents, it can be more easily aggregated, analyzed, and visualized across processes.

In this architecture, quality management systems must be designed with robust data models at their core, with document generation capabilities built on top. This might include:

- A unified data layer that captures all quality-related information

- Flexible document templates that can be populated with data from this layer

- Dynamic relationships between data entities that reflect real-world connections between quality processes

- Powerful query capabilities that enable users to create custom views of data based on specific needs

The resulting system treats documents as what they truly are: snapshots of data formatted for human consumption at specific moments in time, rather than the authoritative system of record.
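To make the four building blocks above more concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for the unified data layer and `string.Template` as a stand-in for a document template. The table names, columns, record identifiers, and sample rows are all assumptions made for illustration; a real quality data model would be far richer.

```python
import sqlite3
from string import Template

# In-memory database standing in for a unified quality data layer.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE deviation (
        id TEXT PRIMARY KEY,
        product TEXT NOT NULL,
        description TEXT NOT NULL
    );
    CREATE TABLE capa (
        id TEXT PRIMARY KEY,
        deviation_id TEXT NOT NULL REFERENCES deviation(id),
        action TEXT NOT NULL,
        status TEXT NOT NULL
    );
""")
conn.executemany(
    "INSERT INTO deviation VALUES (?, ?, ?)",
    [("DEV-001", "Product A", "Fill weight out of range"),
     ("DEV-002", "Product B", "Label misprint")],
)
conn.executemany(
    "INSERT INTO capa VALUES (?, ?, ?, ?)",
    [("CAPA-010", "DEV-001", "Recalibrate filler", "open"),
     ("CAPA-011", "DEV-002", "Replace print head", "closed")],
)

# A custom view: open CAPAs joined to the deviations that triggered them.
rows = conn.execute("""
    SELECT d.id, d.product, d.description, c.id, c.action
    FROM capa AS c
    JOIN deviation AS d ON d.id = c.deviation_id
    WHERE c.status = ?
    ORDER BY c.id
""", ("open",)).fetchall()

# A document template populated from the query result.
line = Template("$capa: $action (raised by $dev on $product: $description)")
report = [
    line.substitute(dev=dev, product=product, description=desc,
                    capa=capa, action=action)
    for dev, product, desc, capa, action in rows
]
```

The report is regenerated from the data whenever it is needed, which is what makes it a snapshot rather than the system of record.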

Electronic Quality Management Systems (eQMS): Beyond Paper-on-Glass

Electronic Quality Management Systems have been adopted widely across life sciences, but many implementations fail to realize their full potential due to document-centric thinking. When implementing an eQMS, organizations often attempt to replicate their existing document-based processes in digital form rather than reconceptualizing their approach around data.

Current Limitations of eQMS Implementations

Document-centric eQMS implementations treat functional documents as discrete objects, much as they were conceived decades ago. They still think in terms of SOPs being discrete documents. They structure workflows, such as non-conformances, CAPAs, change controls, and design controls, with artificial gaps between these interconnected processes. When a manufacturing non-conformance impacts a design control, which then requires a change control, the connections between these events often remain manual and error-prone.

This approach leads to compartmentalized technology solutions. Organizations believe they can solve quality challenges through single applications: an eQMS will solve problems in quality events, a LIMS for the lab, an MES for manufacturing. These isolated systems may digitize documents but fail to integrate the underlying data.

Data-Centric eQMS Approaches

We are in the process of reimagining eQMS as data platforms rather than document repositories. A data-centric eQMS connects quality events, training records, change controls, and other quality processes through a unified data model. This approach enables more effective risk management, root cause analysis, and continuous improvement.

For instance, when a deviation is recorded in a data-centric system, it automatically connects to relevant product specifications, equipment records, training data, and previous similar events. This comprehensive view enables more effective investigation and corrective action than reviewing isolated documents.
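As a rough illustration of that automatic linking, the sketch below gathers the context for a new deviation by following shared keys such as equipment, product, and operator. The `Deviation` class, the `build_context` function, and all identifiers and dates are hypothetical; which keys a real system links on is a design decision for that system.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Deviation:
    """Hypothetical deviation record with the keys used for linking."""
    id: str
    equipment_id: str
    product: str
    operator_id: str


def build_context(new: Deviation,
                  history: List[Deviation],
                  calibration_due: Dict[str, str],
                  training_complete: Dict[str, bool]) -> dict:
    """Collect records related to a new deviation through shared keys."""
    similar = [d.id for d in history
               if d.id != new.id
               and (d.equipment_id == new.equipment_id or d.product == new.product)]
    return {
        "deviation": new.id,
        "similar_events": similar,
        "equipment_calibration_due": calibration_due.get(new.equipment_id),
        "operator_training_complete": training_complete.get(new.operator_id),
    }


# Illustrative records only.
history = [
    Deviation("DEV-001", "EQ-7", "Product A", "OP-1"),
    Deviation("DEV-002", "EQ-3", "Product C", "OP-5"),
    Deviation("DEV-003", "EQ-2", "Product B", "OP-4"),
]
new_deviation = Deviation("DEV-004", "EQ-7", "Product B", "OP-2")

context = build_context(
    new_deviation,
    history,
    calibration_due={"EQ-7": "2025-01-15"},
    training_complete={"OP-2": False},
)
```

Here the investigator starts with two earlier events and an incomplete training record already attached, instead of searching separate documents for them.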

Looking ahead, AI-powered eQMS solutions will increasingly incorporate predictive analytics to identify potential quality issues before they occur. By analyzing patterns in historical quality data, these systems can alert quality teams to emerging risks and recommend preventive actions.

Manufacturing Execution Systems (MES): Breaking Down Production Data Silos

Manufacturing Execution Systems face similar challenges in breaking away from document-centric paradigms. Common MES implementation challenges highlight the limitations of traditional approaches and the potential benefits of data-centric thinking.

MES in the Pharmaceutical Industry

Manufacturing Execution Systems (MES) aggregate a number of the technologies deployed at the Manufacturing Operations Management (MOM) level. MES has been deployed successfully within the pharmaceutical industry, and the technology has matured to the point where it is fast becoming a recognized best practice across all regulated life science industries. This is borne out by the fact that green-field manufacturing sites are starting with an MES in place: paperless manufacturing from day one.

The amount of IT applied to an MES project depends on business needs. At a minimum, an MES should strive to replace paper batch records with an Electronic Batch Record (EBR). Other functionality that can be applied includes automated material weighing and dispensing and integration with ERP systems, which helps optimize inventory levels and production planning.

Beyond Paper-on-Glass in Manufacturing

In pharmaceutical manufacturing, paper batch records have traditionally documented each step of the production process. Early electronic batch record systems simply digitized these paper forms, creating “paper-on-glass” implementations that failed to leverage the full potential of digital technology.

Advanced Manufacturing Execution Systems are moving beyond this limitation by focusing on data rather than documents. Rather than digitizing batch records, these systems capture manufacturing data directly, using sensors, automated equipment, and operator inputs. This approach enables real-time monitoring, statistical process control, and predictive quality management.
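Statistical process control is a good example of what directly captured data makes possible. The sketch below computes limits for an individuals control chart from a baseline run and flags new readings that fall outside them. It uses the common convention of estimating sigma from the average moving range divided by 1.128; the function names and the fill-weight values are assumptions for illustration, and a production system would apply additional run rules.

```python
from statistics import mean
from typing import List, Tuple


def individuals_limits(baseline: List[float]) -> Tuple[float, float, float]:
    """Return (lower limit, center line, upper limit) for an individuals chart.

    Sigma is estimated from the average moving range divided by d2 = 1.128,
    the standard constant for moving ranges of two consecutive points.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points")
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma = mean(moving_ranges) / 1.128
    center = mean(baseline)
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(values: List[float], lcl: float, ucl: float) -> List[int]:
    """Return the indexes of points that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Illustrative fill weights in grams, not real process data.
baseline = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.1, 10.0]
lcl, center, ucl = individuals_limits(baseline)

# New readings arriving from the line; the third one is well above the rest.
flagged = out_of_control([10.1, 9.9, 10.9, 10.0], lcl, ucl)
```

With a paper-style record, the same check would require someone to transcribe the readings first.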

An example of a modern MES built around Pharma 4.0 principles is the Tempo platform developed by Apprentice. It is a complete manufacturing system designed for life sciences companies that leverages cloud technology to provide real-time visibility and control over production processes. The platform combines MES, EBR, LES (Laboratory Execution System), and AR (Augmented Reality) capabilities to create a comprehensive solution that supports complex manufacturing workflows.

Electronic Validation Management Systems (eVMS): Transforming Validation Practices

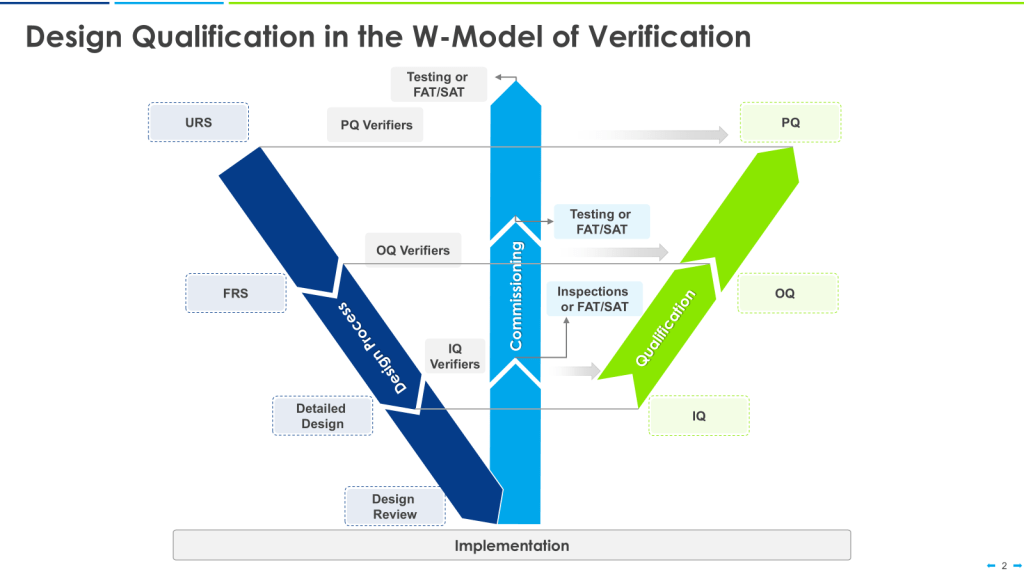

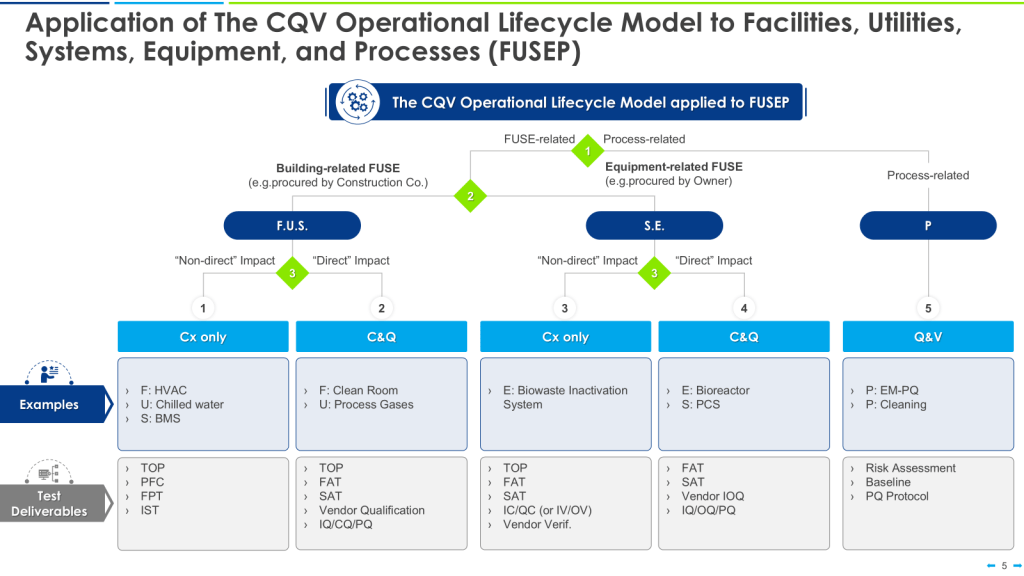

Validation represents a critical intersection of quality management and compliance in life sciences. The transition from document-centric to data-centric approaches is particularly challenging—and potentially rewarding—in this domain.

Current Validation Challenges

Traditional validation approaches face several limitations that highlight the problems with document-centric thinking:

- Integration Issues: Many Digital Validation Tools (DVTs) remain isolated from electronic document management systems (eDMS). The eDMS is typically the first place where vendor engineering data is imported into a client system. However, this data is rarely validated just once; departments typically repeat the validation step multiple times, creating unnecessary duplication.

- Validation for AI Systems: Traditional validation approaches are inadequate for AI-enabled systems. Traditional validation processes are geared towards demonstrating that products and processes will always achieve expected results. However, in the digital “intellectual” eQMS world, organizations will, at some point, experience the unexpected.

- Continuous Compliance: A significant challenge is remaining in compliance continuously during any digital eQMS-initiated change because digital systems can update frequently and quickly. This rapid pace of change conflicts with traditional validation approaches that assume relative stability in systems once validated.
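One way to picture a remedy for the duplication described in the first bullet is a shared registry keyed by a fingerprint of the data itself, so that a second department can see that identical content has already been validated and that changed content has not. This is only a sketch under that assumption: `ValidationRegistry`, `fingerprint`, and the sample vendor data are hypothetical, and the approach says nothing about how the validation itself is performed or approved.

```python
import hashlib
import json
from typing import Dict, Optional


def fingerprint(payload: dict) -> str:
    """Stable SHA-256 fingerprint of a data package (key order does not matter)."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ValidationRegistry:
    """Hypothetical shared record of which data packages were already validated."""

    def __init__(self) -> None:
        self._validated: Dict[str, str] = {}

    def record(self, payload: dict, validated_by: str) -> None:
        """Register that this exact content has been validated."""
        self._validated[fingerprint(payload)] = validated_by

    def validated_by(self, payload: dict) -> Optional[str]:
        """Return who validated this exact content, or None if nobody has."""
        return self._validated.get(fingerprint(payload))


# Illustrative vendor engineering data.
vendor_data = {"equipment": "Bioreactor 1", "max_pressure_bar": 3.0}

registry = ValidationRegistry()
registry.record(vendor_data, validated_by="Engineering")

# Same content in a different key order is recognized as already validated.
reordered = {"max_pressure_bar": 3.0, "equipment": "Bioreactor 1"}
same = registry.validated_by(reordered)

# Any change to the content produces a new fingerprint and no match.
changed = registry.validated_by({**vendor_data, "max_pressure_bar": 3.5})
```

The same lookup also hints at how frequent system updates could be handled: unchanged content keeps its validated status, while anything that changed is surfaced for assessment.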

Data-Centric Validation Solutions

Modern electronic Validation Management Systems (eVMS) solutions exemplify the shift toward data-centric validation management. These platforms introduce AI capabilities that provide intelligent insights across validation activities to unlock unprecedented operational efficiency. Their risk-based approach promotes critical thinking, automates assurance activities, and fosters deeper regulatory alignment.

We should use the digitization and automation of pharmaceutical manufacturing to link real-time data with both the quality risk management system and control strategies. This connection enables continuous visibility into whether processes are in a state of control.

The 11 Axes of Quality 4.0

LNS Research has identified 11 key components or “axes” of the Quality 4.0 framework that organizations must understand to successfully implement modern quality management:

- Data: In the quality sphere, data has always been vital for improvement. However, most organizations still face lags in data collection, analysis, and decision-making processes. Quality 4.0 focuses on rapid, structured collection of data from various sources to enable informed and agile decision-making.

- Analytics: Traditional quality metrics are primarily descriptive. Quality 4.0 enhances these with predictive and prescriptive analytics that can anticipate quality issues before they occur and recommend optimal actions.

- Connectivity: Quality 4.0 emphasizes the connection between operational technology (OT) used in manufacturing environments and information technology (IT) systems including ERP, eQMS, and PLM. This connectivity enables real-time feedback loops that enhance quality processes.

- Collaboration: Breaking down silos between departments is essential for Quality 4.0. This requires not just technological integration but cultural changes that foster teamwork and shared quality ownership.

- App Development: Quality 4.0 leverages modern application development approaches, including cloud platforms, microservices, and low/no-code solutions to rapidly deploy and update quality applications.

- Scalability: Modern quality systems must scale efficiently across global operations while maintaining consistency and compliance.

- Management Systems: Quality 4.0 integrates with broader management systems to ensure quality is embedded throughout the organization.

- Compliance: While traditional quality focused on meeting minimum requirements, Quality 4.0 takes a risk-based approach to compliance that is more proactive and efficient.

- Culture: Quality 4.0 requires a cultural shift that embraces digital transformation, continuous improvement, and data-driven decision-making.

- Leadership: Executive support and vision are critical for successful Quality 4.0 implementation.

- Competency: New skills and capabilities are needed for Quality 4.0, requiring significant investment in training and workforce development.

The Future of Quality Management in Life Sciences

The evolution from document-centric to data-centric quality management represents a fundamental shift in how life sciences organizations approach quality. While documents will continue to play a role, their purpose and primacy are changing in an increasingly data-driven world.

By focusing on data requirements rather than document types, organizations can build more flexible, responsive, and effective quality systems that truly deliver on the promise of digital transformation. This approach enables life sciences companies to maintain compliance while improving efficiency, enhancing product quality, and ultimately delivering better outcomes for patients.

The journey from documents to data is not merely a technical transition but a strategic evolution that will define quality management for decades to come. As AI, machine learning, and process automation converge with quality management, the organizations that successfully embrace data-centricity will gain significant competitive advantages through improved agility, deeper insights, and more effective compliance in an increasingly complex regulatory landscape.

The paper may go, but the document—reimagined as structured data that enables insight and action—will continue to serve as the foundation of effective quality management. The key is recognizing that documents are vessels for data, and it’s the data that drives value in the organization.