PDCA (and its variants) is a tried-and-true model for process improvement. In the PDCA model, a plan is structured in four steps: Plan (P), Do (D), Check (C), and Act (A). The intention is to create a structured cycle: define the objectives to be achieved and how the process should flow to meet them (P), execute what was planned (D), check whether the objectives were achieved, with emphasis on verifying what went right and what went wrong (C), and identify the factors of success or failure to feed a new round of planning (A).

Conceptually, the organization becomes a fast-turning wheel, endlessly learning from its mistakes and optimizing its processes in pursuit of strategic objectives, always searching for the maximum efficiency and effectiveness of the system.

The OODA Loop

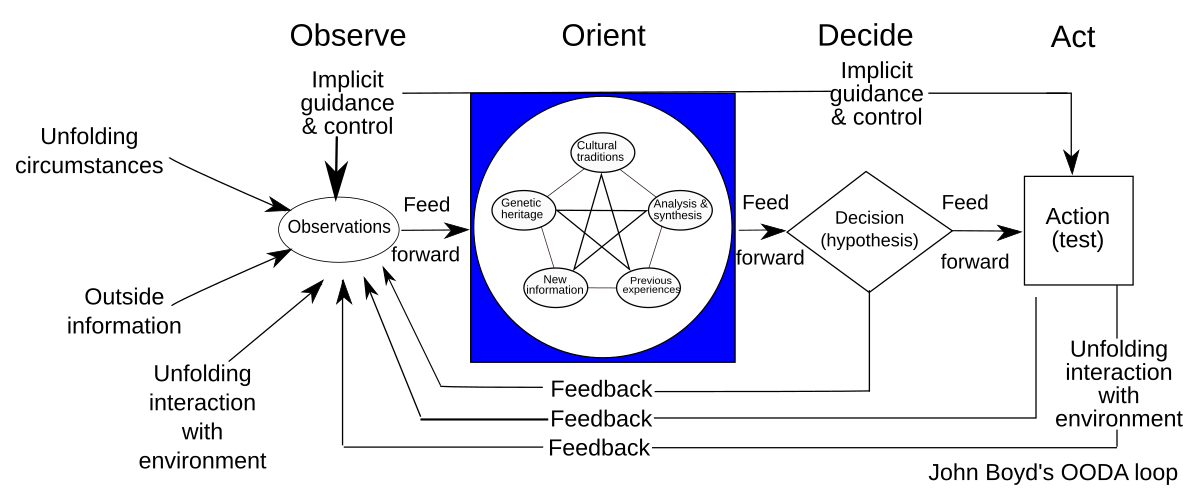

The OODA loop, or cycle, was designed by John R. Boyd and consists of four phases: Observe, Orient, Decide and Act (OODA).

- Observe: Based on implicit guidance and control, observations are made regarding unfolding circumstances, outside information, and dynamic interaction with the environment (including the result of prior actions).

- Orient: Observations from the prior stage are deconstructed into separate component pieces, then analyzed in several contexts, such as cultural traditions, genetic heritage, and previous experiences, and finally synthesized to inform the next phase.

- Decide: In this phase, hypotheses are evaluated, and a decision is made.

- Act: Based on the decision from the prior stage, action is taken to achieve a desired effect or result.
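For readers who think in code, the four phases above can be sketched as a small loop. This is only a toy illustration, not Boyd's formulation: the `OodaLoop` class, the numeric `target`, and the `step` size are all hypothetical names chosen for the example, and "orientation" is reduced to measuring a gap.

```python
from dataclasses import dataclass, field


@dataclass
class OodaLoop:
    """Toy OODA cycle (hypothetical): steer a reading toward a target."""
    target: float
    step: float = 1.0
    history: list = field(default_factory=list)  # results of prior actions

    def observe(self, reading: float) -> dict:
        # Observe: take in the current reading plus the result of the prior action.
        last = self.history[-1] if self.history else None
        return {"reading": reading, "last_action": last}

    def orient(self, observation: dict) -> float:
        # Orient: put the observation in context; here, the gap to the target.
        return self.target - observation["reading"]

    def decide(self, gap: float) -> str:
        # Decide: evaluate simple hypotheses about which response fits the gap.
        if abs(gap) < self.step / 2:
            return "hold"
        return "increase" if gap > 0 else "decrease"

    def act(self, reading: float, decision: str) -> float:
        # Act: apply the decision and record it so the next Observe can see it.
        self.history.append(decision)
        delta = {"increase": self.step, "decrease": -self.step, "hold": 0.0}
        return reading + delta[decision]

    def cycle(self, reading: float) -> float:
        observation = self.observe(reading)
        gap = self.orient(observation)
        decision = self.decide(gap)
        return self.act(reading, decision)


loop = OodaLoop(target=5.0)
reading = 2.0
for _ in range(5):
    reading = loop.cycle(reading)
```

The point of the sketch is the feedback path: each action is recorded and becomes part of the next observation, which is what makes it a loop rather than a checklist.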

While it is similar to PDCA, which improves a known system to make it more efficient or effective (depending on the effect expected), OODA strives to provide a framework for situational awareness.

Because Boyd concentrated on the specific circumstances of military situations, for years the OODA loop did not receive widespread interest. Recently, I’ve been seeing many adaptations of the OODA loop that try to address the needs of operating in volatile, uncertain, complex and ambiguous (VUCA) situations. I especially like seeing it as part of resilience and business continuity.

Enhanced Decision-Making Speed and Agility

The OODA loop enables organizations to make faster, more informed decisions in rapidly changing environments. By continuously cycling through the observe-orient-decide-act process, organizations can respond more quickly to market crises, threats, and emerging opportunities.

Improved Situational Awareness

The observation and orientation phases help organizations maintain a comprehensive understanding of their operating environment. This enhanced situational awareness allows them to identify trends, threats, and opportunities more effectively.

Better Adaptability to Change

The iterative nature of the OODA loop promotes continuous learning and adaptation. This fosters a culture of flexibility and responsiveness, enabling organizations to adjust their strategies and operations as circumstances evolve.

Enhanced Crisis Management

In high-pressure situations or crises, the OODA loop provides a structured approach for rapid, effective decision-making. This can be invaluable for managing unexpected challenges or emergencies.

Improved Team Coordination and Communication

The OODA process encourages clear communication and coordination among team members as they move through each phase. This can lead to better team cohesion and more effective execution of strategies.

Data-Driven Culture

The OODA loop emphasizes the importance of observation and orientation based on current data. This promotes a data-driven culture where decisions are made based on real-time information rather than outdated assumptions.

Continuous Improvement

The cyclical nature of the OODA loop supports ongoing refinement of processes and strategies. Each iteration provides feedback that can be used to improve future observations, orientations, decisions, and actions.

Complementary Perspectives

PDCA is typically used for long-term, systematic improvement projects, while OODA is better suited for rapid decision-making in dynamic environments. Using both allows organizations to address both strategic and tactical needs.

Integration Points

- Observation and Planning
  - OODA’s “Observe” step can feed into PDCA’s “Plan” phase by providing real-time situational awareness.
  - PDCA’s structured planning can enhance OODA’s orientation process.

- Execution
  - PDCA’s “Do” phase can incorporate OODA loops for quick adjustments during implementation.
  - OODA’s “Act” step can trigger a new PDCA cycle for more comprehensive improvements.

- Evaluation
  - PDCA’s “Check” phase can use OODA’s observation techniques for more thorough assessment.
  - OODA’s rapid decision-making can inform PDCA’s “Act” phase for faster course corrections.
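One of the integration points above, running OODA loops inside PDCA’s “Do” phase, can be sketched in a few lines. This is a minimal illustration under assumed names: `ooda_adjust` and `pdca_cycle` are hypothetical, the numeric target stands in for a real objective, and doubling the number of steps is just one arbitrary way the “Act” phase might feed the next plan.

```python
def ooda_adjust(value, target, step=1.0):
    """One quick OODA pass (hypothetical helper)."""
    gap = target - value                          # Observe + Orient
    if abs(gap) < step / 2:                       # Decide: close enough, hold
        return value
    return value + (step if gap > 0 else -step)   # Act: nudge toward target


def pdca_cycle(start, target, do_steps):
    """One PDCA cycle whose Do phase runs several OODA adjustments."""
    plan = {"target": target, "do_steps": do_steps}       # Plan
    value = start
    for _ in range(plan["do_steps"]):                     # Do, with OODA inside
        value = ooda_adjust(value, plan["target"])
    achieved = abs(plan["target"] - value) < 0.5          # Check
    next_steps = do_steps if achieved else do_steps * 2   # Act: input to next plan
    return value, achieved, next_steps


# First cycle falls short, so the Act phase plans more effort for the next one.
value, achieved, next_steps = pdca_cycle(0.0, 5.0, do_steps=3)
value2, achieved2, _ = pdca_cycle(value, 5.0, do_steps=next_steps)
```

The slower outer cycle sets the objective and judges the result, while the fast inner loop handles adjustments during execution, which mirrors the strategic and tactical split described above.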