The Theory of Active and Latent Failures was proposed by James Reason in his book *Human Error*. Reason stated that accidents within most complex systems, such as health care, are caused by a breakdown or absence of safety barriers across four levels within a system. These levels can best be described as Unsafe Acts, Preconditions for Unsafe Acts, Supervisory Factors, and Organizational Influences. Reason used the term “active failures” to describe factors at the Unsafe Acts level, whereas “latent failures” was used to describe unsafe conditions higher up in the system.

This theory is represented as the Swiss Cheese model, which has become very popular in root cause analysis and risk management circles and is widely applied beyond the safety world.

In the Swiss Cheese model, the holes in the cheese depict the failure or absence of barriers within a system. Such occurrences represent failures that threaten the overall integrity of the system. If such failures never occurred within a system (i.e., if the system were perfect), then there would not be any holes in the cheese. We would have a nice Engelberg cheddar.

Not every hole that exists in a system will lead to an error. Sometimes holes may be inconsequential. Other times, holes in the cheese may be detected and corrected before something bad happens. This process of detecting and correcting errors occurs all the time.

The holes in the cheese are dynamic, not static. They open and close over time due to many factors, allowing the system to function appropriately without catastrophe. This is what human factors engineers call “resilience.” A resilient system is one that can adapt and adjust to changes or disturbances.

Holes in the cheese open and close at different rates. The rate at which holes pop up or disappear is determined by the type of failure the hole represents.

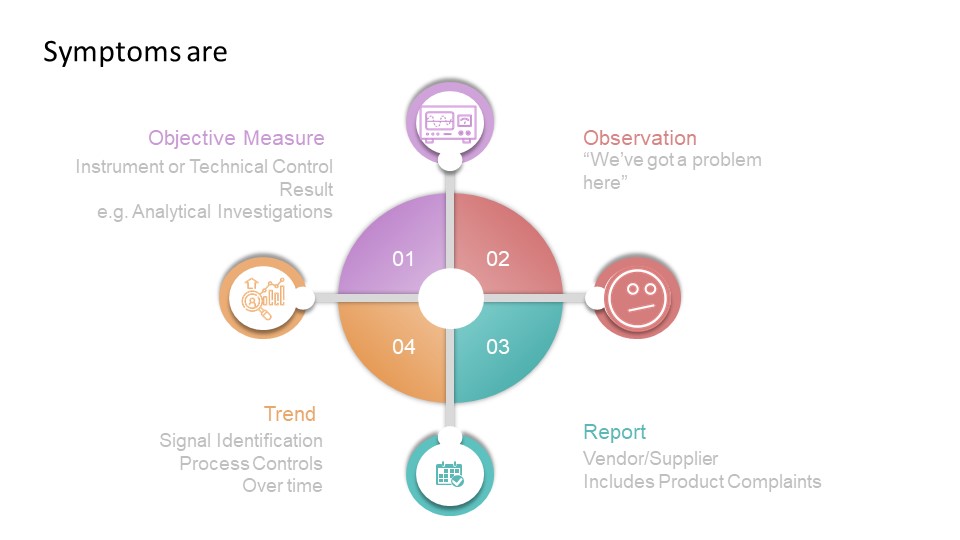

- Holes that occur at the Unsafe Acts level, and even some at the Preconditions level, represent active failures. Active failures usually occur during the activity of work and are directly linked to the bad outcome. They change while the work is being performed, opening and closing over time as people make errors, catch their errors, and correct them.

- Latent failures occur higher up in the system, above the Unsafe Acts level — the Organizational, Supervisory, and Preconditions levels. These failures are referred to as “latent” because when they occur or open, they often go undetected. They can lie “dormant” or “latent” in the system for an extended period of time before they are recognized. Unlike active failures, latent failures do not close or disappear quickly.
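The difference in how quickly active and latent holes open and close can be illustrated with a toy simulation. This is a minimal sketch, not part of Reason's theory: it assumes a single hole per level, and the `LEVELS` probabilities, the `simulate` function, and the idea of counting steps where every level is open at once are all hypothetical choices made for illustration.

```python
import random

# Hypothetical per-step probabilities, chosen only for illustration.
# Each level maps to (chance a closed hole opens, chance an open hole closes).
# Latent levels change slowly; active levels change quickly.
LEVELS = {
    "Organizational Influences": (0.02, 0.02),
    "Supervisory Factors": (0.03, 0.03),
    "Preconditions for Unsafe Acts": (0.10, 0.30),
    "Unsafe Acts": (0.30, 0.70),
}


def simulate(steps, seed=0):
    """Toggle one hole per level over time and count full alignments."""
    rng = random.Random(seed)
    is_open = {level: False for level in LEVELS}
    toggles = {level: 0 for level in LEVELS}
    aligned_steps = 0

    for _ in range(steps):
        for level, (p_open, p_close) in LEVELS.items():
            # An open hole may close; a closed hole may open.
            p = p_close if is_open[level] else p_open
            if rng.random() < p:
                is_open[level] = not is_open[level]
                toggles[level] += 1
        # An "event" is only possible when every level has an open hole.
        if all(is_open.values()):
            aligned_steps += 1

    return toggles, aligned_steps


if __name__ == "__main__":
    toggles, aligned = simulate(10_000)
    for level, count in toggles.items():
        print(f"{level}: opened or closed {count} times")
    print(f"Steps with a hole open at every level: {aligned}")
```

With these made-up rates, the Unsafe Acts hole should toggle far more often than the Organizational one, while steps where all four holes are open together stay comparatively rare.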

Most events (harms) are associated with multiple active and latent failures. The typical Swiss Cheese diagram above shows an arrow flying through one hole at each level of the system, but in practice a variety of failures at each level can interact to produce an event. In other words, there can be several failures at the Organizational, Supervisory, Preconditions, and Unsafe Acts levels that all lead to harm. The holes associated with events are more numerous at the Unsafe Acts and Preconditions levels, and (usually) become fewer as one progresses upward through the Supervisory and Organizational levels.

Given the frequency and dynamic nature of activities, there are more opportunities for holes to open up at the Unsafe Acts and Preconditions levels. As a result, more holes are often identified at these levels during root cause investigations and risk assessments.

The way the holes in the cheese interact across levels is important:

- One-to-many mapping of causal factors is when a single hole at a higher level (e.g., Preconditions) results in several holes at a lower level (e.g., Unsafe Acts).

- Many-to-one mapping of causal factors is when multiple holes at a higher level (e.g., Preconditions) interact to produce a single hole at a lower level (e.g., Unsafe Acts).

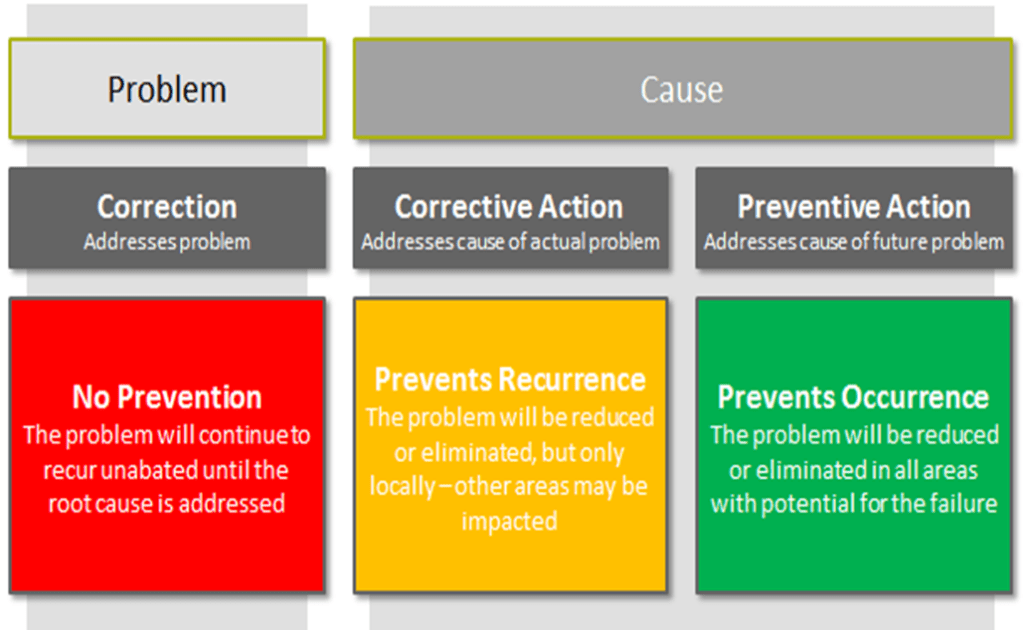

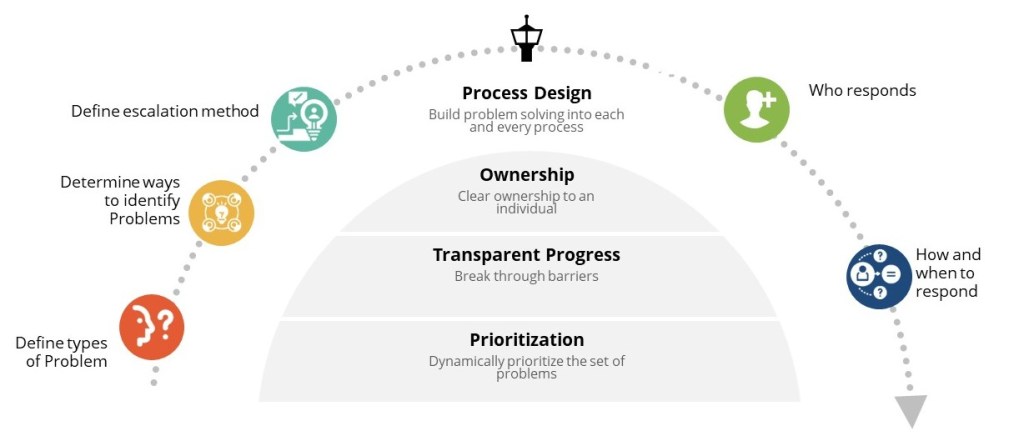
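The two mappings above can also be expressed as a small data structure. The following is a minimal sketch under stated assumptions: the `LINKS` list is invented example data (not findings from any real investigation), and `classify_mappings` is a hypothetical helper that groups causal links to reveal one-to-many and many-to-one patterns.

```python
from collections import defaultdict

# Hypothetical causal links, invented for illustration only:
# (hole at the higher level, hole at the lower level it contributed to).
LINKS = [
    ("Fatigue", "Skipped checklist step"),
    ("Fatigue", "Misread label"),
    ("Time pressure", "Wrong dose drawn up"),
    ("Poor lighting", "Wrong dose drawn up"),
]


def classify_mappings(links):
    """Group links to find one-to-many and many-to-one relationships."""
    effects = defaultdict(set)  # higher-level hole -> lower-level holes
    causes = defaultdict(set)   # lower-level hole -> higher-level holes
    for higher, lower in links:
        effects[higher].add(lower)
        causes[lower].add(higher)

    # One higher-level hole that feeds several lower-level holes.
    one_to_many = {h: sorted(ls) for h, ls in effects.items() if len(ls) > 1}
    # Several higher-level holes that combine into one lower-level hole.
    many_to_one = {l: sorted(hs) for l, hs in causes.items() if len(hs) > 1}
    return one_to_many, many_to_one


if __name__ == "__main__":
    one_to_many, many_to_one = classify_mappings(LINKS)
    print("One-to-many:", one_to_many)
    print("Many-to-one:", many_to_one)
```

In this made-up data, "Fatigue" is a one-to-many precondition because it feeds two unsafe acts, while "Wrong dose drawn up" is a many-to-one unsafe act because two preconditions combine to produce it.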

By understanding the Swiss Cheese model, and Reason’s wider work on Active and Latent Failures, we can strengthen our approach to problem-solving.

Plus, cheese is cool.