Quality stands in a position, sometimes uniquely in an organization, of engaging with stakeholders to understand what objectives and unique positions the organization needs to assume, and the choices that are making in order to achieve such objectives and positions.

The effectiveness of the team in making good decisions by picking the right choices depends on their ability of analyzing a problem and generating alternatives. As I discussed in my post “Design Lifecycle within PDCA – Planning” experimentation plays a critical part of the decision making process. When designing the solution we always consider:

- Always include a “do nothing” option: Not every decision or problem demands an action. Sometimes, the best way is to do nothing.

- How do you know what you think you know? This should be a question everyone is comfortable asking. It allows people to check assumptions and to question claims that, while convenient, are not based on any kind of data, firsthand knowledge, or research.

- Ask tough questions! Be direct and honest. Push hard to get to the core of what the options look like.

- Have a dissenting option. It is critical to include unpopular but reasonable options. Make sure to include opinions or choices you personally don’t like, but for which good arguments can be made. This keeps you honest and gives anyone who see the pros/cons list a chance to convince you into making a better decision than the one you might have arrived at on your own.

- Consider hybrid choices. Sometimes it’s possible to take an attribute of one choice and add it to another. Like exploratory design, there are always interesting combinations in decision making. This can explode the number of choices, which can slow things down and create more complexity than you need. Watch for the zone of indifference (options that are not perceived as making any difference or adding any value) and don’t waste time in it.

- Include all relevant perspectives. Consider if this decision impacts more than just the area the problem is identified in. How does it impact other processes? Systems?

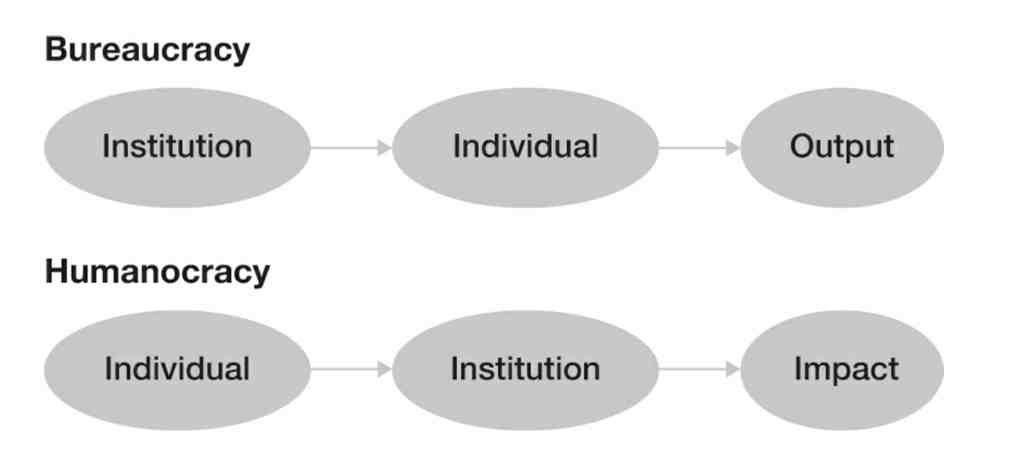

A struggle every organization has is how to think through problems in a truly innovative way. Installing new processes into an old bureaucracy will only replace one form of control with another. We need to rethink the very matter of control and what it looks like within an organization. It is not about change management, on it sown change management will just shift the patterns of the past. To truly transform we need a new way of thinking.

One of my favorite books on just how to do this is Humanocracy: Creating Organizations as Amazing as the People Inside Them by Gary Hamel and Michele Zanini. In this book, the authors advocate that business must become more fundamentally human first. The idea of human ability and how to cultivate and unleash it is an underlying premise of this book.

it’s possible to capture the benefits of bureaucracy—control, consistency, and coordination—while avoiding the penalties—inflexibility, mediocrity, and apathy.

Gary Hamel and Michele Zanini, Humanocracy, p. 15

The above quote really encapsulates the heart of this book, and why I think it is such a pivotal read for my peers. This books takes the core question of a bureaurcacy is “How do we get human beings to better serve the organization?”. The issue at the heart of humanocracy becomes: “What sort of organization elicits and merits the best that human beings can give?” Seems a simple swap, but the implications are profound.

I would hope you, like me, see the promise of many of the central tenets of Quality Management, not least Deming’s 8th point. The very real tendency of quality to devolve to pointless bureaucracy is something we should always be looking to combat.

Humanocracy’s central point is that by truly putting the employee first in our organizations we drive a human-centered organization that powers and thrives on innovation. Humanocracy is particularly relevant as organizations seek to be more resilient, agile, adaptive, innovative, customer centric etc. Leaders pursuing such goals seek to install systems like agile, devops, flexible teams etc. They will fail, because people are not processes. Resiliency, agility, efficiency, are not new programming codes for people. These goals require more than new rules or a corporate initiative. Agility, resilience, etc. are behaviors, attitudes, ways of thinking that can only work when you change the deep ‘systems and assumptions’ within an organization. This book discusses those deeper changes.

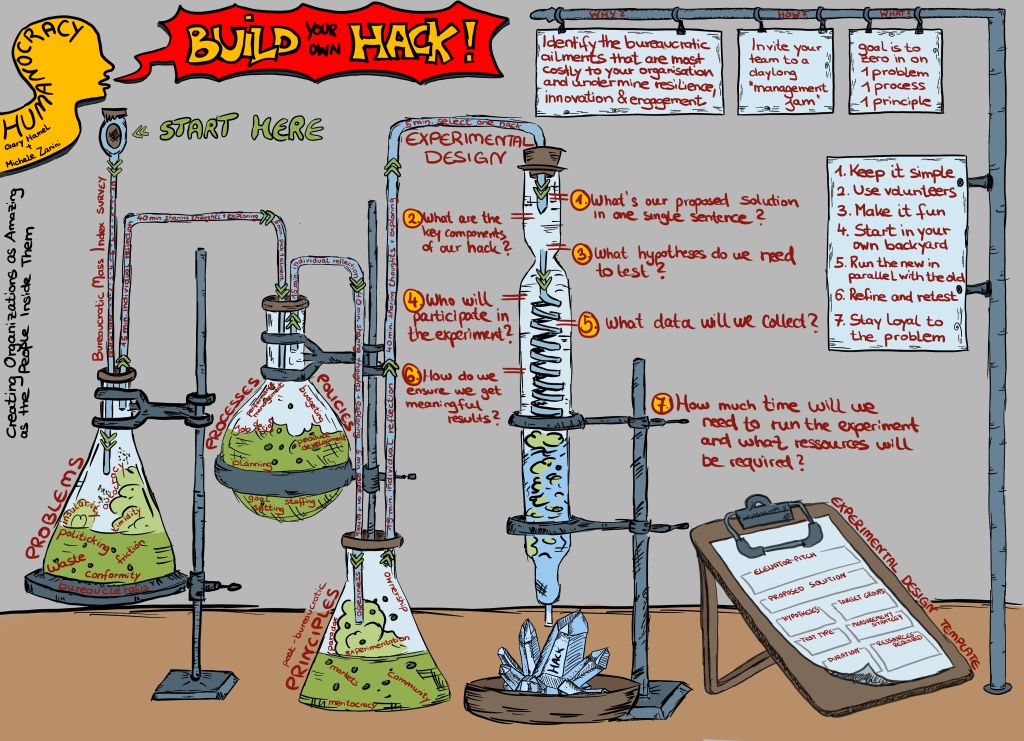

Humanocracy lays out seven tips for success in experimentation. I find they align nicely with Kotter’s 8 change accelerators.

| Humanocracy’s Tip | Kotter’s Accelerator |

| Keep it Simple | Generate (and celebrate) short-term wins |

| Use Volunteers | Enlist a volunteer army |

| Make it Fun | Sustain Acceleration |

| Start in your own backyard | Form a change vision and strategic initiatives |

| Run the new parallel with the old | Enable action by removing barriers |

| Refine and Retest | Sustain acceleration |

| Stay loyal to the problem | Create a Sense of Urgency around a Big Opportunity |