A reader asked what the FDA expects of a “training program,” and for tips on shifting the culture and attitude toward positive training behaviors.

I’ve answered the FDA part before in “Site Training Needs,” “Training Plan,” “CVs and JDs and Training Plans,” and “HR and Quality, joined at the hip,” but I think it is worth revisiting the topic and looking at some recent FDA 483 observations.

> Employees are not given training in the particular operations they perform as part of their function, current good manufacturing practices and written procedures required by current good manufacturing practice regulations.
>
> Specifically, your firm does not have a written training program. There are no records to demonstrate (b)(6) is qualified [to] perform filling operations. I observed (b)(4) perform filling operation for Wart Control Extra Strength, 4 ml, lot USWCXx-4059 on 01/18/23. I observed (b)(7)(C) perform reprocessing and packing operations. There are no records to demonstrate (b)(7)(C) is qualified [to] perform these operations.

> Employees are not given training in current good manufacturing practices and written procedures required by current good manufacturing practice regulations.
>
> Specifically, your firm lacked training documentation for cGMP, SOPs, or specific job functions for all employees that perform analytical testing on finished OTC drug products. Additionally, your firm lacks a written procedure on employee training.

I could list a bunch more, but they all say pretty much the same thing. The expectation boils down to two points:

- Have a documented training program.

- Conduct training on the operations an individual performs AND on general GxP principles appropriate to their job.

A documented training program should set out:

- Job Descriptions

- Organizational Charts

- Curriculum Vitae/Resume

- Identifying Training Needs

- Individual Learning Plans (Training Assignments)

- Training Program Execution

- Development and Management of Training Materials

- Training Execution

- New Hire Orientation

- On-the-Job Training (OJT)

- Continuous Training

- Qualified Trainers

- Training Records of Personnel

- Periodic Review of Training System Performance

Conducting training starts with a training plan that identifies what appropriate training looks like.

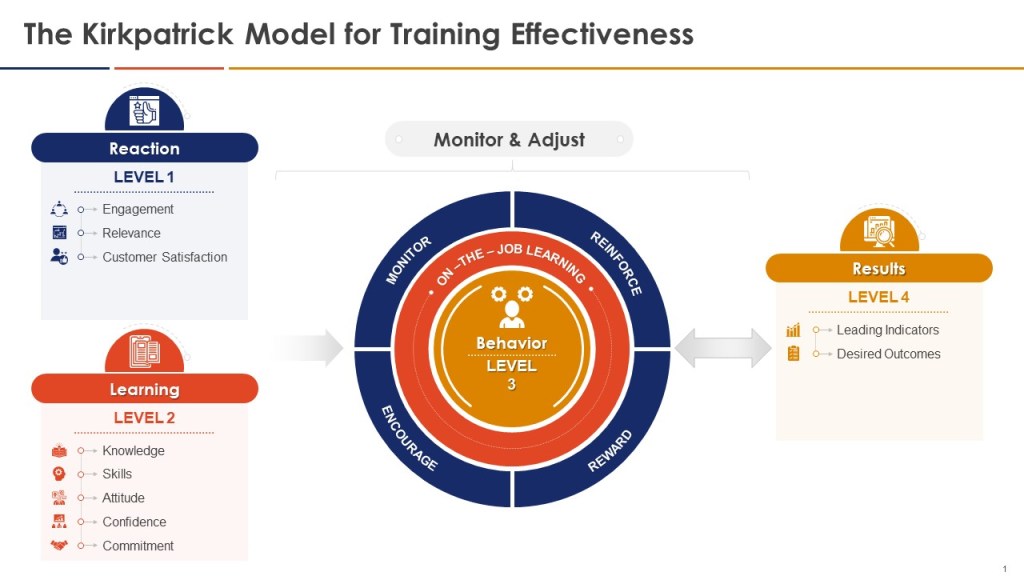

The question is always what level of training is adequate. The honest answer is whatever works, and you aren’t training and educating your personnel enough. This is one of those things where the proof is in the pudding. Build ways to measure training effectiveness and you will be golden.